Challenge Overview

Problem Statement

Prize Distribution

Background

According to Wikipedia, "Mud logging is the creation of a detailed record (well log) of a borehole by examining the cuttings of rock brought to the surface by the circulating drilling medium (most commonly drilling mud)." Quartz Energy has provided Topcoder with a set of mud logs and we're developing an application to extract structured meaning from these records. The documents are very interesting - they are even oil-well shaped! You can read more details about them here.

If oil is revealed in a well hole sample, a "Show" may be recorded in logs. This is one of the most important pieces of information in the mud logs. Our first attempt to gather information from these files is going to be to find the relevant mud logging terms within the text of these mud logs. The mud logging terms relevant for this contest (phrases) are split into the following categories:

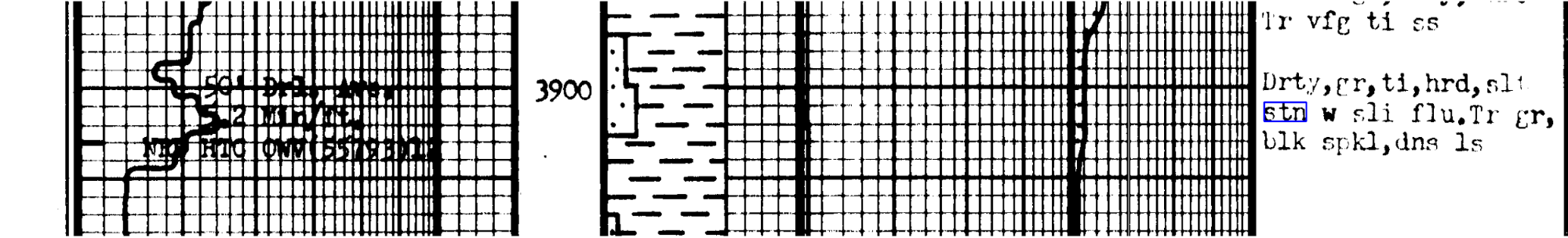

In this contest, all of the above phrases are equally important.

Objective

Your task is to identify all occurrences of the relevant mud logging terms, hereafter referred to as "phrases", in a set of input mud log images.

Several previous development challenges and additional modifications resulted in a baseline solution which can be found here. See the included readme for details on how to use this solution. The final result of this solution is the population of a database which would then need to be exported to a CSV file. You may utilize and build upon this codebase if you desire, but this is not a requirement. The previous challenges were Python only, but you are not restricted to only using Python in this contest.

The phrases your algorithm returns will be compared to the ground truth data, and the quality of your solution will be judged according to how well your solution matches the ground truth. See the "Scoring" section for details. The baseline solution achieves a score of approximately 105,000 on the testing set. You must improve upon this result and achieve a final system score of at least 110,500 in order to be eligible for a prize.

Input Data Files

The only input data which your algorithm will receive are the raw mud log images in TIFF format. These image files typically have a height much larger than their width. The maximum image width is 10,000 pixels and the maximum image height is 200,000 pixels. While these images contain a large amount of information, we are only interested in the identification of relevant mud logging phrases.

The training data set has 205 images which can be downloaded here and the ground truth can be downloaded here. The ground truth file has the same columns as the output file described below. You can use this data set to train and test your algorithm locally.

The testing data set, containing only images, has 205 images and can be downloaded here. This image set has been randomly partitioned into a provisional set with 61 images and a system set with 144 images. The partitioning will not be made known to the contestants during the contest. The provisional set is used only for the leaderboard during the contest. During the competition, you will submit your algorithm's results when using the entire testing data set as input. Some of the images in this data set, the provisional images, determine your provisional score, which determines your ranking on the leaderboard during the contest. This score is not used for final scoring and prize distribution. Your final submission's score on the system data set will be used for final scoring. See the "Final Scoring" section for details.

A small portion of a black-and-white mud log image is shown below. In this image, the relevant mud logging phrases are in the rightmost section. For illustration purposes only, the phrase "stn" has been correctly identified and its bounding box has been highlighted in blue.

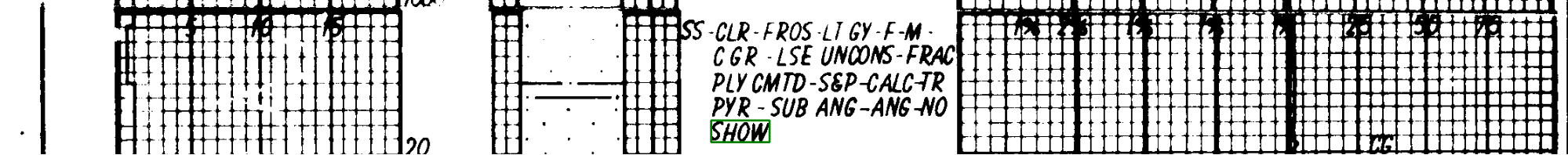

Note: For phrases which appear to span multiple lines, the text on each line should be considered independently. In the below example of a mud log, it may appear that the final phrase in this text block is "no show". However, for this contest, you should consider this to be two separate phrases "no" and "show". For illustration purposes only, the phrase "show" has been correctly identified and its bounding box has been highlighted in green.

Output File

This contest uses the result submission style. For the duration of the contest, you will run your solution locally using the provided provisional data set images as input and produce a CSV file which contains your results. Your output file must be a headerless CSV file which contains one phrase per row. The CSV file should have the following columns, in this order:

Therefore, each row of your result CSV file must have the format:

<IMAGE_NAME>,<OCR_PHRASE>,<X1>,<Y1>,<X2>,<Y2>

Image name and phrase should not be enclosed in quotation marks, and each phrase should be composed of only lower case alphabet letters and spaces. For example, one row of your result CSV file may be:

1234567890_sample.tif,oil stain,123,456,200,500

Functions

During the contest, only your output CSV file, containing the provisional results, will be submitted. In order for your solution to be evaluated by Topcoder's marathon match system, you must implement a class named MudLogging, which implements a single function: getAnswerURL(). Your function will return a String corresponding to the URL of your submission file.

You may upload your output file to a cloud hosting service such as Dropbox or Google Drive, which can provide a direct link to the file. To create a direct sharing link in Dropbox, right click on the uploaded file and select share. You should be able to copy a link to this specific file which ends with the tag "?dl=0". This URL will point directly to your file if you change this tag to "?dl=1". You can then use this link in your getAnswerURL() function.

If you use Google Drive to share the link, then please use the following format: "https://drive.google.com/uc?export=download&id=XYZ" where XYZ is your file id. Note that Google Drive has a file size limit of 25MB and can't provide direct links to files larger than this. (For larger files the link opens a warning message saying that automatic virus checking of the file is not done.)

You can use any other way to share your result file, but make sure the link you provide opens the filestream directly, and is available for anyone with the link (not only the file owner), to allow the automated tester to download and evaluate it.

An example of the code you have to submit, using Java:

    public class MudLogging
    {
        public String getAnswerURL()
        {
            // Replace the returned String with your submission file's URL
            return "https://drive.google.com/uc?export=download&id=XYZ";
        }
    }
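The row format and the Dropbox link change described above can be sketched as a small Java helper. This is only an illustration under stated assumptions: the class name SubmissionRows and both method names are hypothetical and not part of the contest API, and lower-casing the phrase before validating it is a convenience choice rather than something the statement requires.

```java
import java.util.Locale;

// Hypothetical helper; class and method names are illustrative only.
final class SubmissionRows {

    // Builds one headerless CSV row:
    // <IMAGE_NAME>,<OCR_PHRASE>,<X1>,<Y1>,<X2>,<Y2>
    // Assumption: the phrase is trimmed and lower-cased before validation.
    static String formatRow(String imageName, String phrase,
                            int x1, int y1, int x2, int y2) {
        String normalized = phrase.trim().toLowerCase(Locale.ROOT);
        // The statement allows only lower case letters and spaces.
        if (!normalized.matches("[a-z ]+")) {
            throw new IllegalArgumentException(
                "phrase must contain only letters and spaces: " + phrase);
        }
        return imageName + "," + normalized + ","
            + x1 + "," + y1 + "," + x2 + "," + y2;
    }

    // Turns a Dropbox sharing link ending in "?dl=0" into a direct
    // link ending in "?dl=1"; other links are returned unchanged.
    static String toDirectDropboxLink(String sharingLink) {
        if (sharingLink.endsWith("?dl=0")) {
            return sharingLink.substring(0, sharingLink.length() - 1) + "1";
        }
        return sharingLink;
    }
}
```

For instance, SubmissionRows.formatRow("1234567890_sample.tif", "Oil Stain", 123, 456, 200, 500) produces the example row shown earlier in this section.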

Keep in mind that your complete code that generates these results will be verified at the end of the contest if you achieve a score in the top 10, as described later in the "Requirements to Win a Prize" section, i.e. participants will be required to provide fully automated executable software to allow for independent verification of the performance of your algorithm and the quality of the output data.

Scoring

Example submissions will be scored against the entire training data set. Provisional submissions should include predictions for all images in the testing data set. Provisional submissions will be scored against the provisional image set during the contest. Your final provisional submission will be scored against the system image set at the end of the contest.

Phrase predictions will be scored against the ground truth in the following way. The overlap factor, O(A, B), between 2 bounding boxes, A and B, is the area of the intersection of A and B divided by the area of the union of A and B. Overlap factor is always between 0 and 1.

O(A, B) = area(A ∩ B) / area(A ∪ B)

For each image, all of the predicted phrases for this image are iterated over in the order they appear in the CSV file. The counters numFp, numMatch, numMatchPhrase, and numMissed are initialized to zero. For each phrase:
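The arithmetic in this Scoring section (the overlap factor, the per-image score, and the final normalized score) can be sketched in Java for local testing. This is a minimal sketch, not the official scorer: the class and method names are hypothetical, boxes are assumed to be {x1, y1, x2, y2} arrays with x1 <= x2 and y1 <= y2, and areas are computed on continuous coordinates, which may differ from how the official tester counts pixels. The rule that decides when a prediction counts as a match is not reproduced here.

```java
// Hypothetical sketch of the scoring arithmetic; names are illustrative.
final class ScoringSketch {

    // Overlap factor O(A, B): intersection area divided by union area.
    // Assumption: each box is {x1, y1, x2, y2} with x1 <= x2, y1 <= y2.
    static double overlap(int[] a, int[] b) {
        long interW = Math.max(0, Math.min(a[2], b[2]) - Math.max(a[0], b[0]));
        long interH = Math.max(0, Math.min(a[3], b[3]) - Math.max(a[1], b[1]));
        long inter = interW * interH;
        long areaA = (long) (a[2] - a[0]) * (a[3] - a[1]);
        long areaB = (long) (b[2] - b[0]) * (b[3] - b[1]);
        long union = areaA + areaB - inter;
        // Two degenerate (zero-area) boxes are treated as non-overlapping.
        return union == 0 ? 0.0 : (double) inter / union;
    }

    // imageScore = max(-8 * numFp + numMatch + 2 * numMatchPhrase - numMissed, 0)
    static long imageScore(long numFp, long numMatch,
                           long numMatchPhrase, long numMissed) {
        return Math.max(-8 * numFp + numMatch + 2 * numMatchPhrase - numMissed, 0);
    }

    // score = 1,000,000 * sumImageScore / (3 * numPhrasesGt)
    static double normalizedScore(long sumImageScore, long numPhrasesGt) {
        return 1_000_000.0 * sumImageScore / (3.0 * numPhrasesGt);
    }
}
```

Because each false positive costs 8 points while a full match earns at most 3, a single spurious prediction can cancel several correct ones on the same image, so a local scorer along these lines may help when tuning a confidence threshold.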

For every ground truth phrase which was not matched, numMissed is increased by 1. The score for this image is calculated as a weighted sum of the matching counters, floored at zero:

imageScore = max(-8 * numFp + numMatch + 2 * numMatchPhrase - numMissed, 0)

The total score across all images, sumImageScore, is the sum of each individual image score. The maximum possible total score is 3 times the total number of phrases in the ground truth, numPhrasesGt. Final normalized score:

score = 1,000,000 * sumImageScore / (3 * numPhrasesGt)

Final Scoring

The top 10 competitors with system test scores of at least 110,500 are asked to participate in a two-phase final verification process. Participation is optional but necessary for receiving prizes.

Phase 1. Code Review

Within 2 days from the end of the submission phase you must package the source code you used to generate your latest submission and send it to jsculley@copilots.topcoder.com and tim@copilots.topcoder.com so that we can verify that your submission was generated algorithmically. We won't try to run your code at this point, so you don't have to package data files, model files or external libraries; this is just a superficial check to see whether your system looks convincingly automated. If you pass this screening you'll be invited to Phase 2.

Phase 2. Online Testing

You will be given access to an AWS VM instance. You will need to load your code to your assigned VM, along with three scripts for running it:

Your solution will be validated. We will check if it produces the same output file as your last provisional submission, using the same provisional testing input files used in this contest. We are aware that it is not always possible to reproduce the exact same results. For example, if you do online training then the difference in the training environments may result in a different number of iterations, meaning different models. Also, you may have no control over random number generation in certain 3rd party libraries. In any case, the results must be statistically similar, and in case of differences you must have a convincing explanation why the same result cannot be reproduced.

Competitors who fail to provide their solution as expected will receive a zero score in this final scoring phase, and will not be eligible to win prizes.

General Notes

Requirements to Win a Prize

In order to receive a prize, you must do all of the following:


This problem statement is the exclusive and proprietary property of TopCoder, Inc. Any unauthorized use or reproduction of this information without the prior written consent of TopCoder, Inc. is strictly prohibited. (c)2020, TopCoder, Inc. All rights reserved.