Challenge Overview

This is the Medium (500 point) problem of RDM-2.

Consolidated Match Leaderboard is available here.

Please Note - The files linked in the specs will only be accessible after you register for the challenge.

Problem Description

Brian lives in a small apartment in an expensive city. Since he started working from home and rarely has to go to the office, he has been thinking of setting up a cosy home office in a small room in his apartment. Space is at a premium, so he has to be creative with the little he has available. He needs:

- a sofa

- a wardrobe

- a bookcase

- a desk

He wants to place them against the walls so that the free area in the centre of the room is maximized. The longest side of each item must lie completely against a wall, and each item must use a different wall for its longest side. The other sides may touch a wall too, but this is not mandatory. The room has a door that needs a square-shaped area for opening and closing, so that area is considered occupied. The door area is always in a corner of the room (it touches 2 walls). The items must not overlap each other or the door area.

After a lot of browsing, he saves a list of items in the following format: id, name, type (one of the following: sofa, wardrobe, bookcase, desk), price, dimensions (width x depth in cm). An example input CSV file will be provided in the forum: (items.csv).
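The item list described above could be loaded roughly as follows. This is only a sketch: it assumes that the file has a header row with the column names `id`, `name`, `type`, `price` and `dimensions`, and that dimensions are written like `200x90`. Neither detail is confirmed by this description, so check them against the provided items.csv. The function name `parse_items` is hypothetical.

```python
import csv
import io
import re

# Assumption: dimensions look like "200x90" (width x depth, in cm).
DIMENSIONS = re.compile(r"\s*(\d+(?:\.\d+)?)\s*[xX]\s*(\d+(?:\.\d+)?)\s*")

def parse_items(text):
    """Group (id, width, depth) tuples by furniture type."""
    by_type = {"sofa": [], "wardrobe": [], "bookcase": [], "desk": []}
    # Assumption: the first row is a header naming the five columns.
    for row in csv.DictReader(io.StringIO(text), skipinitialspace=True):
        kind = row["type"].strip()
        match = DIMENSIONS.fullmatch(row["dimensions"])
        if kind not in by_type or match is None:
            continue  # skip rows this sketch does not understand
        width, depth = float(match.group(1)), float(match.group(2))
        by_type[kind].append((row["id"].strip(), width, depth))
    return by_type
```

Grouping by type up front makes it easy to enumerate one item of each type later on.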

He hasn't paid a lot of attention to the dimensions of the items so there might be combinations of items that don't fit according to the "against a wall" requirement. He figured he'd write a script to find all valid combinations that follow the requirement.

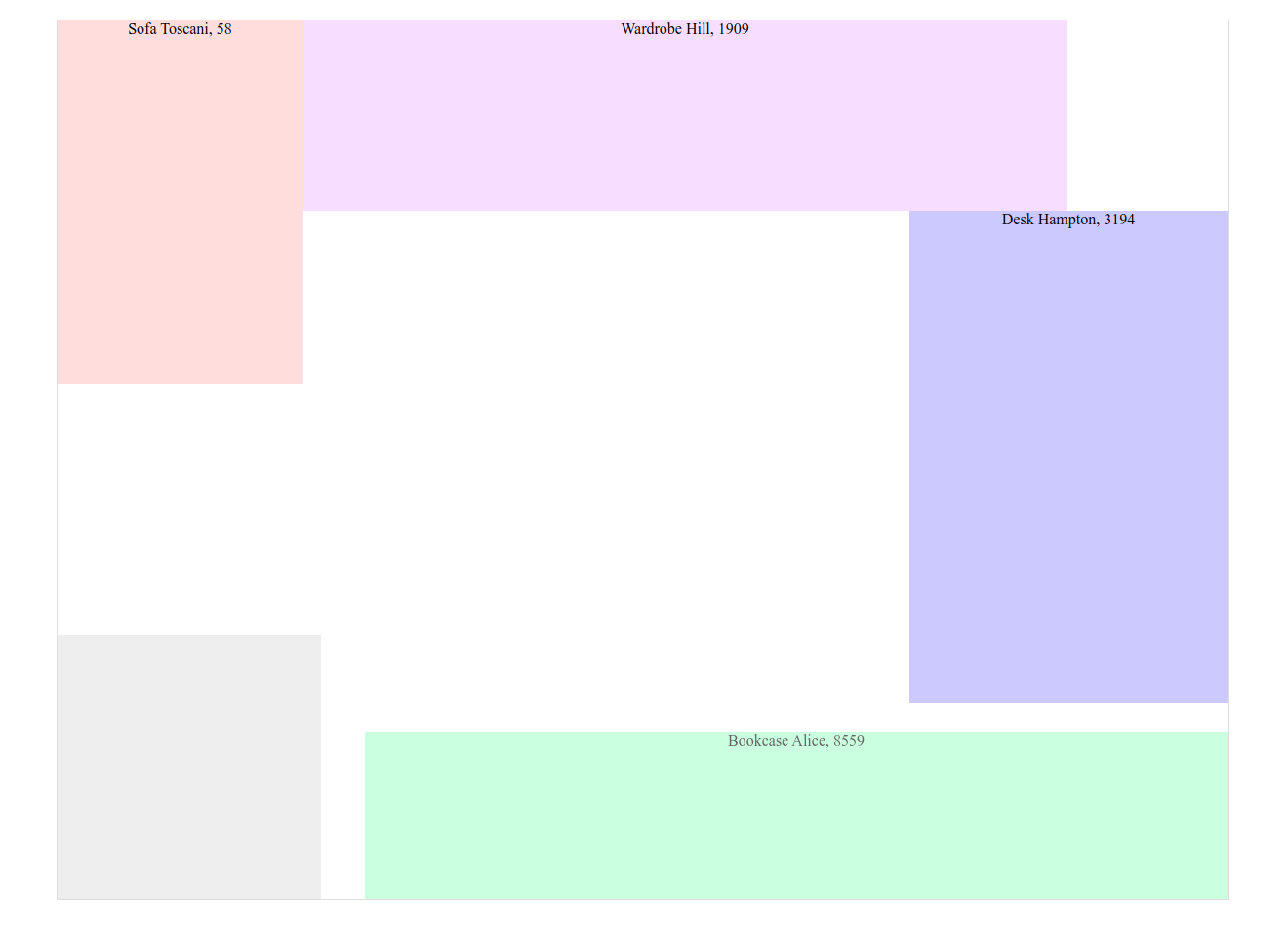

Example floor plan with items positioned correctly:

Keep in mind that for most of the test cases there are multiple ways to position the items correctly. We only care if at least one is possible.
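One possible way to test whether a single combination fits is sketched below. It is an interpretation of the rules above, not the tester's actual logic. The assumptions are: items are axis-aligned, touching edges do not count as overlapping, and the door square sits in the bottom-left corner (only the door length is given, and mirroring the room should make the corners equivalent). The function names `fits` and `overlaps` are hypothetical. The sketch tries every assignment of items to walls and only a small set of positions along each wall: if any valid layout exists, sliding each item towards the origin leaves it at 0, flush with the door, or flush with another item.

```python
from itertools import permutations, product

def overlaps(a, b):
    """True when rectangles (x1, y1, x2, y2) share interior area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def fits(items, room_w, room_d, door):
    """items: four (width, depth) pairs. True if some valid layout exists."""
    door_rect = (0, 0, door, door)  # assumption: door in the bottom-left corner
    dims = [(max(w, d), min(w, d)) for w, d in items]  # (long side, short side)
    for bottom, top, left, right in permutations(dims):
        # Stop positions after sliding left/down: a wall, the door, or an item.
        xs0 = {0, door, left[1]}
        ys0 = {0, door, bottom[1]}
        xs = xs0 | {x + bottom[0] for x in xs0} | {x + top[0] for x in xs0}
        ys = ys0 | {y + left[0] for y in ys0} | {y + right[0] for y in ys0}
        for xb, xt, yl, yr in product(xs, xs, ys, ys):
            rects = [
                (xb, 0, xb + bottom[0], bottom[1]),              # bottom wall
                (xt, room_d - top[1], xt + top[0], room_d),      # top wall
                (0, yl, left[1], yl + left[0]),                  # left wall
                (room_w - right[1], yr, room_w, yr + right[0]),  # right wall
            ]
            if any(r[0] < 0 or r[1] < 0 or r[2] > room_w or r[3] > room_d
                   for r in rects):
                continue  # an item sticks out of the room
            rects.append(door_rect)
            if not any(overlaps(rects[i], rects[j])
                       for i in range(5) for j in range(i + 1, 5)):
                return True
    return False
```

Every candidate layout is verified explicitly, so trying extra positions costs time but cannot produce a false positive. The sketch favours clarity over speed; a real submission would likely need pruning to handle 100 items.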

Write a CLI script that finds all valid (sofa, wardrobe, bookcase, desk) combinations based on the CSV file with the list of items, the room width, room depth and door length. These will be provided as parameters when running the CLI app. The output file should be a CSV file that contains a valid and unique combination of furniture items on each line with this format <id_type1>, <id_type2>, <id_type3>, <id_type4> where type* is one of the 4 types: sofa, wardrobe, bookcase, desk. In case there is no valid combination, an empty file should be returned.
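Writing the result file could look like the sketch below. It assumes the ids are written in the order sofa, wardrobe, bookcase, desk and separated by plain commas; compare with the sample output.csv before relying on either assumption. The function name `write_combinations` is hypothetical.

```python
import csv

def write_combinations(out, combos):
    """Write one combination of four ids per line, skipping duplicates.

    An empty iterable produces an empty file, as required when no
    combination is valid.
    """
    writer = csv.writer(out, lineterminator="\n")
    seen = set()
    for combo in combos:
        key = tuple(combo)
        if key in seen:
            continue  # each combination must appear only once
        seen.add(key)
        writer.writerow(key)
```

The function accepts any writable text stream, so it works with an open file as well as an in-memory buffer.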

The script should be converted to an executable so it can be executed globally. This part is already implemented in the sample Dockerfile* files provided in the sample submission, but you might need to update the steps if you are using a language not covered by the samples or if you need custom steps. Please read code/README.md for more details on what the tester expects. The tester container must be able to execute the global shell command in the following way:

>solution -i <items_file_path> -w <room_width> -d <room_depth> -l <door_length>

The command should output the name of the result file or its relative path (no absolute paths), e.g.:

result.csv

Sample output file: output.csv
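The command-line interface above could be wired up in Python roughly as follows. This is a minimal sketch: the flags `-i`, `-w`, `-d` and `-l` come from the command shown above, while the attribute names, the use of `float` for the measurements and the `result.csv` file name (taken from the example) are assumptions. The solver itself is left out.

```python
import argparse

def build_parser():
    """Parser for: solution -i <items> -w <width> -d <depth> -l <door>."""
    parser = argparse.ArgumentParser(prog="solution")
    parser.add_argument("-i", dest="items_file", required=True,
                        help="path to the items CSV file")
    parser.add_argument("-w", dest="room_width", type=float, required=True,
                        help="room width in cm")
    parser.add_argument("-d", dest="room_depth", type=float, required=True,
                        help="room depth in cm")
    parser.add_argument("-l", dest="door_length", type=float, required=True,
                        help="side of the square door area in cm")
    return parser

def main(argv=None):
    args = build_parser().parse_args(argv)
    out_name = "result.csv"  # relative path, as the tester expects
    with open(out_name, "w", newline="") as out:
        # Placeholder: compute the valid combinations from args and write
        # them to `out`. Leaving the file empty means "no valid combination".
        pass
    print(out_name)  # the tester reads the result file name from stdout
    return out_name
```

Calling `main()` from the script's entry point and printing nothing else on stdout keeps the output limited to the result file name.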

Constraints and Guidelines

- The file with furniture items will contain a minimum of 4 and a maximum of 100 lines.

- The width of the room won't exceed 400 cm and the depth of the room won't exceed 300 cm.

- The door area is a square and the door length won't exceed the room's depth.

- Please use the forums to ask any questions.

- A minimal level of code quality is expected so that the reviewer can read and review your submission.

- Obvious and deliberate code obfuscation will be rejected.

- Collaborating in any way with anyone else (member or not) during a rated event is considered cheating.

- An excessive amount of unused content should be avoided.

Technology Stack

Any language that allows creating a CLI app that can then be converted to a shell command.

Sample Submission

This challenge uses a purely automated testing approach to score the submissions, so we are providing a sample submission and an automated tester with a basic test case assembled in a way that simulates the final testing. Docker is used to achieve this. Please read the README.md file to find out how to run the setup.

The sample submission will be in the code folder and it should be extended to implement the requirements. Make sure to read the guidelines from the README.md file before starting to implement your submission.

Sample submission with local tester: rdm2-medium-sample-and-tester.zip

Sample submission to submit on the platform: rdm2-medium-sample-submission.zip

Important: Make sure you don't submit the executable file you generated locally (solution). While testing locally, the binary file will be generated in code/src and it should be removed before archiving and uploading your submission.

Final Submission Guidelines

Submission Deliverables

Your submission must be a single ZIP file not larger than 10 MB containing just the code folder with the same structure as the one from the sample submission. The sample tester should not be included in the submission. Also make sure you don't submit any build folders generated locally like node_modules, dist etc.

You must follow this submission folder structure so that our automated test process can score your submission:

- Create a folder named “code”.

- Inside the “code” folder, there needs to be a file named Dockerfile. This is the Dockerfile used to build your submission. Refer to the Dockerfile provided in the Sample Submission for each level.

- Zip that “code” folder and submit it to the challenge.

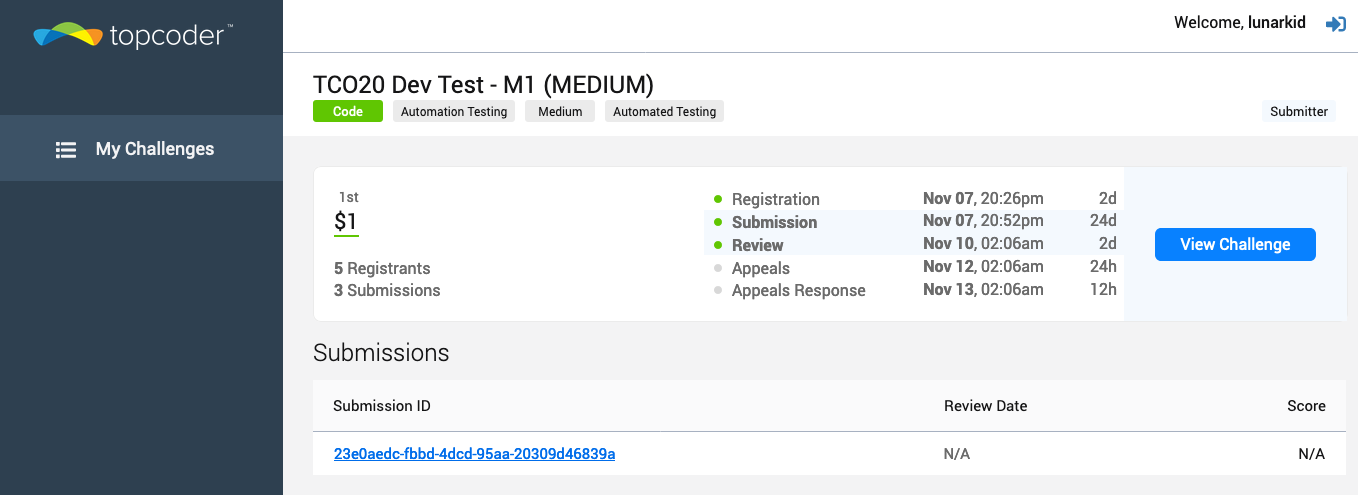

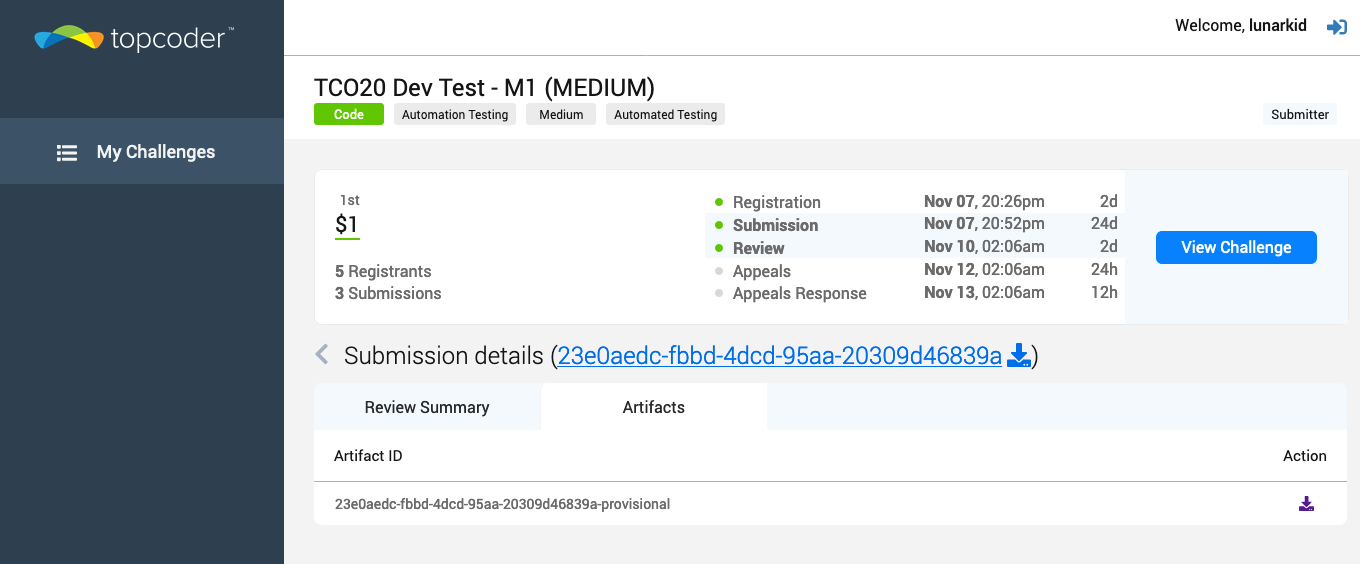

Execution Details and Submission Logs

Each time you submit, the platform will leverage Docker to run your code. The execution logs will be saved as “Artifacts” that can be downloaded from the Submission Review App: https://submission-review.topcoder.com/.

Checking Passing and Failing Test Cases

Using the Submission Review App (https://submission-review.topcoder.com/), navigate to the specific challenge, then to your submission, and then to the Artifacts for your submission. The zip file you download will contain information about your submission including a result.json file with the test results.