June 28, 2018

Development Review - Role & Responsibilities

The review process is a critical phase in the Topcoder challenge lifecycle. Reviews are crucial for maintaining top quality for customer deliverables, determining challenge winners, and providing opportunities for skills growth via feedback for participating members of each challenge. Reviewers and the review process are what make the Topcoder methodology unique and provide a tremendous amount of value to all Topcoder participants.

In order to understand your role and responsibilities as a reviewer, you’ll need to understand the overall review process. Take a look at these other articles to get an overview.

You’ll also need to be familiar with Online Review, where the reviews are managed for Topcoder.

How to Become a Reviewer

If you are an active participant and interested in joining the Topcoder Development Review Board, please visit the reviewer qualification page for details.

The Reviewer Mindset

There’s more to being a great reviewer than just filling out a scorecard. We rely on our reviewers to take ownership of the success and overall quality of the challenge output. You will be representing Topcoder in your actions and in your communications, and it’s important to make sure you’re prepared for what’s ahead. Here’s some advice from some of our experienced reviewers about how to approach the role.

Just as a challenge submission must be solely the work of the submitter, reviews must be done solely by the reviewer assigned to that review.

Always apply common sense to all aspects of a review. If you can’t understand a good reason for doing something, then perhaps it shouldn’t be done. If you have any questions or doubts during a review, please contact the challenge manager/copilot for your project via the Contact Manager link in Online Review. Asking questions early will lead to better quality reviews and will help prevent a long appeals process.

It is critical you are prepared to do a proper review. Make sure you feel comfortable with a challenge before applying for review positions. Be prepared and ensure you understand the requirements, technology, and desired goals for the challenge.

Do not apply if you are just learning the technology. You should be a competitor in those cases. Doing this is grounds for removal from the review board.

It is your responsibility to review the forum for clarifications or adjustments that were made during the challenge. If something is unclear, contact the challenge manager/copilot.

Plan ahead and start your reviews early so you are not rushing at the end. All the timelines are available at the start of a challenge. Set aside the time and plan accordingly. Occasionally, reviewer deadlines may change as a challenge is in progress due to all reviews being finished early or if multiple rounds of final fixes are required. Make sure your schedule is flexible enough to complete all your responsibilities on time even if the overall timeline changes slightly.

When completing a scorecard, provide detailed review comments that are supported by objective facts. You are responsible for including details such as specific references to challenge requirements or forum posts that support your scores. This will make the appeals process much smoother.

Be as respectful and positive as you can in your review comments. You are leaving feedback for your fellow members in the Topcoder community and positive, constructive feedback will benefit everyone.

You will encounter submissions that solve the problem in a different way than you would have, but this should not influence your review. Conduct your review based on how the submission meets the requirements, not on how it meets your own ideal design or development or how it compares to other submissions. If you have suggestions on how to improve the submission you can write comments in the review. These suggestions are very welcome, but they should not influence the scoring.

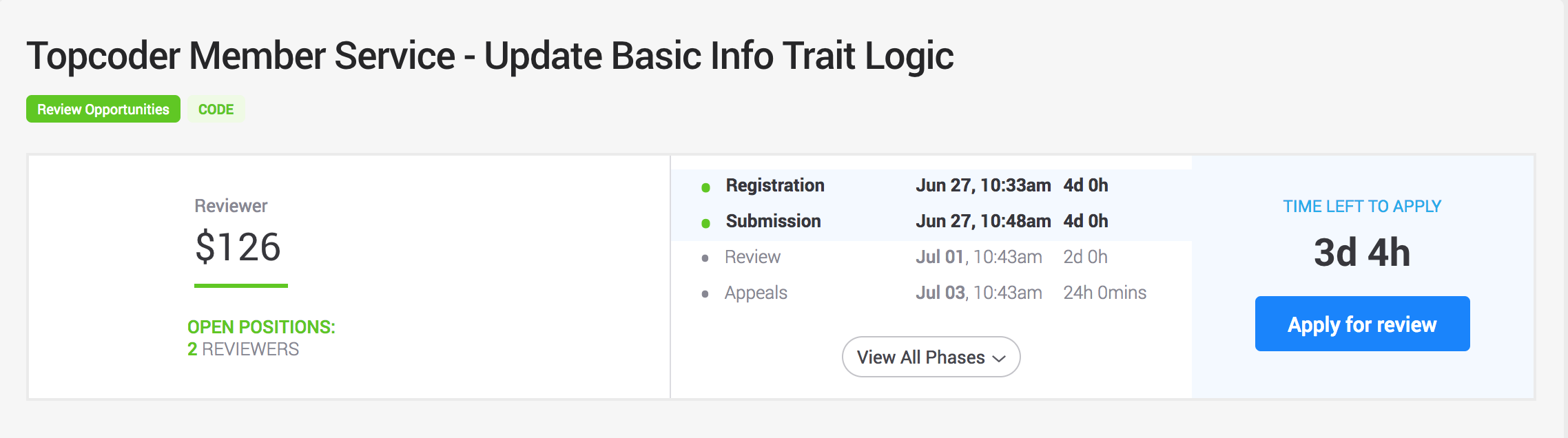

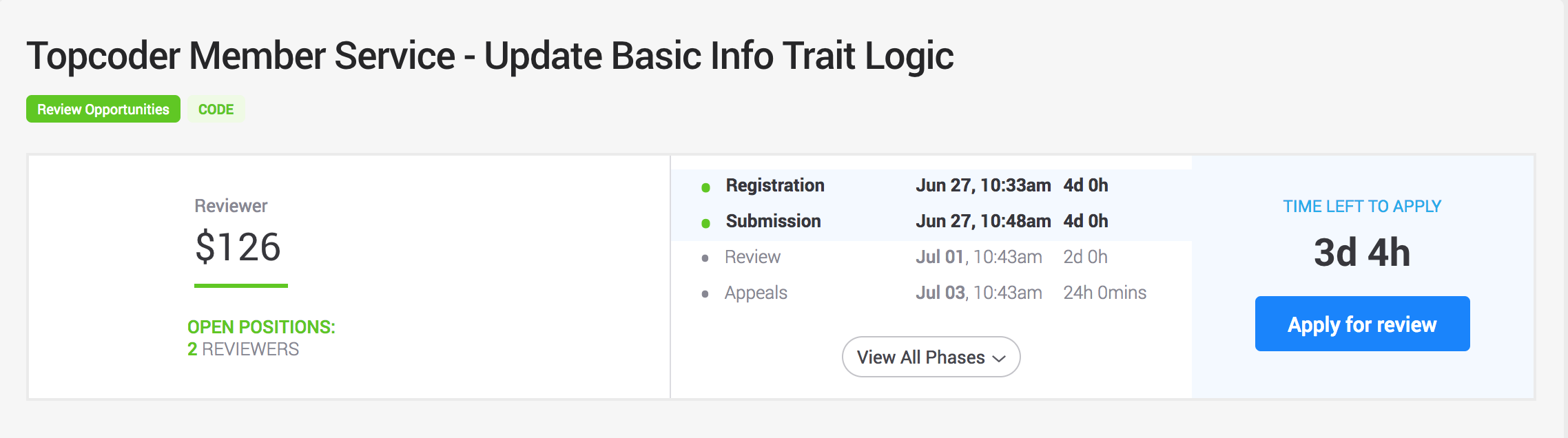

Applying for Review Opportunities

Once you have been invited to be a review board member, you may apply to review challenges. Challenges with open review positions are listed under the Review Opportunities page. To find open review positions, do the following:

Go to the challenge listings page.

Click Open for Review in the side nav on the right.

Before applying to review a challenge, take the time to study the goals, requirements, timeline, and ensure that you are proficient in the technology necessary to do the job. If you aren’t comfortable with the requirements or technologies for a specific challenge, you should not apply for it. Applying for reviews where you do not have the necessary skills will result in being suspended from the review board.

To apply for a review position, check the checkboxes for the positions you are interested in. The Topcoder review process has two types of reviewers: Primary and Secondary. The Primary Reviewer has additional responsibilities (screening, final review, aggregation) and is paid a higher amount to cover this additional work. Secondary reviewers are responsible only for the review and appeals process.

When you apply for a review opportunity you may apply to be both a Primary Reviewer and a Secondary Reviewer. You can apply for multiple positions if you feel comfortable with each of them, but the system will assign you to at most one position. If you only apply for the Primary Reviewer position and someone else is selected, you will not be able to receive a Secondary Reviewer position.

Before applying for a Primary Reviewer position you should look at the estimated project phase timeline on the review opportunity details page. The Primary Reviewer is solely responsible for completing several phases of the review process and any delays in completing these tasks can delay the entire project. There is a lot of responsibility on the Primary Reviewer so make sure you are prepared to handle it before applying for the position.

To complete your application click the “Apply Now” button.

Reviewer Selection Algorithm

Topcoder is currently revamping this algorithm. Today, assignments are based on Topcoder’s evaluation of your previous reviews, technology skills, and your current workload. If you have any questions please email support@topcoder.com.

How to Perform a Review

Review scorecards are entered via Online Review (also referred to as “OR”). Any assigned review scorecards can be accessed on the challenge page in Online Review under the “Outstanding Deliverables” section. Simply click the link to open the scorecard you wish to enter.

How to Download Submissions to Review

Reviewers need to download submissions from Online Review in order to view them. To download a submission open Online Review and navigate to the challenge you are reviewing. The submissions can be downloaded by clicking on the submission ID under the “Review/Appeals” tab under the timeline table.

How to Complete a Scorecard

One of the main tasks a reviewer is responsible for is completing a review scorecard via Online Review. Scorecards are different for each track at Topcoder, and different challenges within a track may even have different scorecards. As a reviewer, it is your job to read the scorecard carefully and make sure you follow the scoring guidelines explained for each section.

You may open the scorecard by viewing the challenge in Online Review and clicking on the scorecard link under “Outstanding Deliverables”. Any assigned scorecards (including reviews, appeals responses, aggregation, or final review) will be listed under this section. After completing a scorecard you can choose to save the scorecard to revisit later or submit it by clicking the buttons at the bottom of the scorecard.

Don’t forget to use the “Save for Later” button at the bottom of the review scorecard often so you don’t lose your work!

How to Answer Scorecard Questions

For each scorecard question you will need to select a score using the dropdown menu in the “Response” column (on the right side of the screen).

The score you select must follow the scoring guidelines described in the question description. If this question description isn’t displayed you may click on the ‘+’ icon to the left of the question number to expand the description.

In addition to a score, you must also enter a comment that explains the score you selected. Be sure to include supporting details and objective facts to justify your score. Online Review includes an “Add Response” button under each comment.

You may use this button to leave multiple comments for any question on the scorecard. For each question, you should enter one comment for each issue you find. Providing multiple comments makes it much easier for submitters to appeal specific comments, and it makes the aggregation, final fix, and final review phases much easier to manage for everyone.

How to Approach Reviews

View Each Review in Isolation

A reviewer should never, for any reason, compare submissions in order to assign scores. Each submission should be reviewed in a vacuum. For this reason, justifying an appeal response based on “the score being fair because all other submissions were treated in the same way” is invalid. Every scorecard and every appeal response should stand by itself. You should always score a submission based on how well it meets the challenge requirements and scorecard guidelines (NOT on how it compares to other submissions). To help avoid comparing submissions you may want to focus on reviewing one submission at a time and completing the entire scorecard before even looking at the next submission.

Provide Clear Reasoning

Every scorecard should be self-explanatory. At any point in time, anybody should be able to read the scorecard and easily understand why each item was scored the way it was. This means if you score a submission down, you need to have corresponding reasons documented in the scorecard. The competitors should never need a clarification in order to appeal, the challenge admin should never need a clarification from you in order to determine whether an appeal was properly answered or not, and website visitors shouldn’t need to guess in order to know what you thought of the submission. Every item in the scorecard needs to be properly explained and justified. This means including facts and references in your review comments.

Also, make sure to add a separate response for every point you want to make. Do not combine several points into the same item, but use the “Add Response” button instead. Using separate items will make it much easier for submitters to appeal each individual item. It also makes the tasks of aggregation, final fixes, and final review much easier.

Approaching Overlapping Scores

Sometimes a project will have a problem that several different review items address. In this case, the decision on how to score is up to you. A very small problem probably deserves to be scored down only once, while a large problem probably justifies adjusting the score in multiple items. When doing this, please explicitly reference the item that the score is overlapping from so the submitter can see where the score comes from. When deciding which item to score down (or up, this principle also applies to enhancements), you should use the more specific items first.

Reviewers should use their best judgment and common sense when overlapping scores. Submitters spend days working on their submissions and it’s not fair to fail a submission for a minor item that has been scored down in multiple sections of the review scorecard. If you determine that a problem with a submission is minor, pick the most relevant scorecard section and only score it down there.

Reviewers can avoid over-penalizing a submission with overlapping scores by leaving comments in multiple scorecard sections but only scoring the problem down in the most relevant scorecard section. Leaving comments without taking points off is helpful in later stages of the review and ensures the problem will be corrected during final fixes.

Review Item Comments

Be clear and factual in your comments. Including specific references, such as page numbers or forum posts, is helpful for everyone involved in the review process. If the point you are trying to make is complicated or difficult to understand you may want to consider re-stating your point in multiple ways or providing an example.

The Topcoder community is made up of members from all over the world. Many of these members speak English as a second language. Reviewers need to be aware of potential language barriers and avoid using vocabulary that is unnecessarily complex.

Distinguish Between Required vs. Recommended

An item should only be marked “Required” if it affects the submission’s ability to meet the project requirements as outlined in the challenge requirements specification and forum discussions. These include all issues that may block the solution from working in production, including bugs and poorly written code that could affect functionality, scalability and/or performance, but also code hygiene issues including documentation, test cases and potential deployment problems. All other items are to be marked “Recommended”. Reviewers should not mark items “Required” out of personal preference. This practice is detrimental to the quality of challenge deliverables: it tends to cause unnecessary final fixes, and final fixes are where new issues are most likely to be introduced because they are reviewed by only one person. Additionally, reviewers can leave comments on their scorecard by marking the item as “Comment”.

It’s very important for the aggregation, final fix, and final review phases that items be correctly classified on your scorecard.

Reviewing Maintenance Projects

Certain projects involve upgrading a previous submission or existing code. When reviewing these projects, you should only concern yourself with the modifications (and not the pre-existing code base). The submitter is only responsible for fixing any parts of the old code base that needed to be changed to fit the new requirements. They will not be penalized for existing code hygiene issues like formatting, documentation, etc.

If a submitter chooses to improve aspects of the old code base that are not addressed by the requirements, the best place to reward that extra work is the overall comments review item. We encourage submitters to overachieve, but the bulk of the scorecard is concerned with how the requirements are met and exceeded.

Leaving Feedback on Trivial Issues

Please concentrate on the core aspects of a submission. It is important to also consider the details, but you shouldn’t be spending time chasing down issues that have no impact on the overall design or development. For example, whether a Java interface’s methods are explicitly declared public or not has no bearing on the readability of the code, or on the resulting bytecode after compilation. This should not be considered an issue at all.

If you find trivial issues while reviewing a submission you may want to consider leaving feedback about the item without scoring the submission down. Harsh scoring of trivial issues has the potential to fail a submission and possibly even cause the challenge to fail if no submissions pass review. If a submission meets all the core aspects of a challenge’s requirements there is no reason it should fail due to trivial issues. Reviewers are welcome and encouraged to point out trivial issues, but these types of small issues should not have a major impact on the overall score. Use your best judgment on the severity of issues before entering a score.

Additional Items to Watch Out For

Reviews in development tracks that involve coding (Code, Assembly, F2F, UI Prototype, etc.) may require some additional review steps that other challenge tracks don’t require. For example, testing code is required for reviews in these types of tracks.

Code Redundancy and Helpers

Submitters often use helper classes and methods to factor out certain common tasks such as parameter checking and SQL resource closing. There has been some debate as to whether using helpers for parameter checking is good or not. Topcoder’s official stance is that using or not using a helper for parameter checking is a minor detail that should not affect a submission’s score.

Subjective Reviews

Some development challenges will employ a subjective review. This means that it will be up to the reviewer (often the copilot and/or customer) to determine the winners based on how they interpret each submission’s applicability to the challenge requirements. Specific scorecards are used in this case. You will find only one question in the scorecard, which is used to rank the submissions from best to worst. Follow the guidelines in the scorecard. Note: The system currently won’t allow ties, so you have to rank the submissions such that each has a unique placement. If you do set the same score on two of them, the earlier submission timestamp is the tie breaker.
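As a rough illustration of the tie-breaking rule, the sketch below orders submissions by score and falls back to the earlier submission timestamp when two scores are equal. The `Submission` record, its field names, and the sample data are hypothetical and are not taken from Online Review.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Submission:
    # Hypothetical record; the field names are illustrative only.
    handle: str
    score: float
    submitted_at: datetime


def rank_submissions(submissions):
    """Highest score first; on equal scores the earlier submission ranks higher."""
    return sorted(submissions, key=lambda s: (-s.score, s.submitted_at))


submissions = [
    Submission("alice", 90.0, datetime(2018, 6, 20, 14, 0)),
    Submission("bob", 95.0, datetime(2018, 6, 21, 9, 30)),
    Submission("carol", 90.0, datetime(2018, 6, 19, 8, 15)),
]

for place, sub in enumerate(rank_submissions(submissions), start=1):
    print(place, sub.handle, sub.score)
```

Here "carol" and "alice" share a score, so "carol" places second because her submission is earlier.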

Tests

Kinds of Tests

There are several different kinds of tests that can be used during the review process. Some challenges require tests, while others don’t. Please check the challenge requirements and scorecards for the challenge you’re reviewing. When tests are required, you may see the following classifications of test cases:

Stress - Stress reviewers should try to find the kinds of problems that only appear under heavy load. If a program leaks resources, the stress tests should find this. Similarly, if a component is supposed to be thread safe then the stress tests should try to find deadlocks and race conditions. Stress tests should also try to find inefficient implementations by timing them out.

Failure - Failure reviewers should concern themselves with whether the component handles error conditions as the design specifies. Trigger every kind of error the component should handle, and verify that the component handles it properly (throws the proper exception, doesn’t leak resources, etc.)

Accuracy - Accuracy reviewers should find out whether the component produces the right results for any valid input. Find and test the boundary cases, just like you would in an algorithm competition’s challenge phase.
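To make the three classifications concrete, here is a minimal sketch with one test of each kind. The component `parse_percentage`, the load size, and the time budget are all assumptions made up for this example; real challenges define their own components and limits.

```python
import time


def parse_percentage(text):
    """Hypothetical component under test: parse '0'..'100' into an int."""
    value = int(text)  # raises ValueError for non-numeric text
    if not 0 <= value <= 100:
        raise ValueError(f"percentage out of range: {value}")
    return value


def test_accuracy_boundaries():
    # Accuracy: valid inputs, with emphasis on the boundary cases.
    assert parse_percentage("0") == 0
    assert parse_percentage("100") == 100
    assert parse_percentage("42") == 42


def test_failure_invalid_input():
    # Failure: every invalid input must raise the documented exception.
    for bad in ["-1", "101", "abc", ""]:
        try:
            parse_percentage(bad)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {bad!r}")


def test_stress_many_calls(calls=100_000, budget_seconds=5.0):
    # Stress: a heavy load must finish within an (assumed, generous) time budget.
    start = time.perf_counter()
    for i in range(calls):
        parse_percentage(str(i % 101))
    assert time.perf_counter() - start < budget_seconds
```

A real stress suite would usually also exercise concurrency and resource cleanup, which this sketch leaves out.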

Intermittent Test Failures

Sometimes, a test fails only once every few runs. Sometimes it will fail once, and then run properly many times in a row. These incidents must be investigated, since there are bugs that only show up rarely or when the system is under heavy load. Just because a test runs properly once does not mean that the code is correct. Always run each test suite several times.
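One possible way to run a test several times is a small helper that repeats it and counts the failures. The helper name `count_failures` and the stand-in `flaky_test` are hypothetical; the stand-in fails on every 7th call only so that the example is reproducible, which real intermittent failures are not.

```python
def count_failures(test, runs=20):
    """Run a zero-argument test callable `runs` times; return how many runs failed."""
    failures = 0
    for _ in range(runs):
        try:
            test()
        except Exception:
            failures += 1
    return failures


# Call counter used by the stand-in flaky test below.
calls = {"n": 0}


def flaky_test():
    # Deterministic stand-in for an intermittent bug: fails on every 7th call.
    calls["n"] += 1
    assert calls["n"] % 7 != 0, "intermittent failure"


print(count_failures(flaky_test, runs=20))  # prints 2 (calls 7 and 14 fail)
```

A single run of this test passes, which is exactly why one green run proves little.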

Validity

Tests should be valid for an indefinitely long period of time, as clients may wish to run the tests every time they deploy the code. Do not assume that temporary conditions (such as the existence of an external email address) will hold when the tests are run.

Moreover, tests should be valid in any environment. Assuming that a certain file or directory is read-only, for example, makes a possibly invalid assumption about the environment the tests will be run in. A much better approach would be to first find out if the conditions to run the test are met, and then decide whether to run it or not. Other common (and troublesome) assumptions include assuming that the directory separator character is either ‘/’ or ‘\’, and any other type of hard-coding.
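The two points above can be sketched as follows: build paths with the platform's own separator, and check a precondition before deciding whether a test applies. The helper names `data_path` and `can_run_read_only_test` are hypothetical.

```python
import os
import tempfile


def data_path(*parts):
    """Build a path under test_files without hard-coding a separator."""
    return os.path.join("test_files", *parts)


def can_run_read_only_test(directory):
    """Check the precondition instead of assuming it: a test that expects
    writes to fail only makes sense if the directory really is not writable."""
    return os.path.isdir(directory) and not os.access(directory, os.W_OK)


with tempfile.TemporaryDirectory() as scratch:
    if can_run_read_only_test(scratch):
        print("running read-only test")
    else:
        print("skipping read-only test: precondition not met")
```

A freshly created temporary directory is writable, so this sketch takes the skip branch rather than reporting a misleading failure.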

One last comment about the environment setup: when writing tests, it is always better to replicate a real environment than to simulate it with mock classes when possible. For example, a component that uses database persistence should be tested using a real database whenever possible.

Configuration

To address the above hard-coding problem, tests that need configurable information must use environment-specific solutions. You can use whichever is most appropriate for the situation, but try to minimize the dependencies you add to the code. Common items to configure include server addresses, file paths, and database connection strings. Test configuration files should reside under the test_files directory.

During final review, the primary reviewer should ensure that all tests are configurable and that, if possible, they all read from the same configuration file for database and other server connections. That way, the user of the component does not need to configure four separate files just to get the database connection working.
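A minimal sketch of a single shared configuration file, assuming an INI format read with Python's standard `configparser`. The file name `test_config.ini`, the `[database]` section, and its keys are hypothetical; the demo writes the file into a temporary `test_files` directory so that it runs anywhere.

```python
import configparser
import os
import tempfile


def load_test_config(path):
    """Read every test setting from one INI file; fail loudly if it is missing."""
    parser = configparser.ConfigParser()
    if not parser.read(path):  # read() returns the list of files it parsed
        raise FileNotFoundError(f"test configuration not found: {path}")
    return parser


# Demo only: create a sample configuration file, then load it.
with tempfile.TemporaryDirectory() as root:
    config_dir = os.path.join(root, "test_files")
    os.makedirs(config_dir)
    config_path = os.path.join(config_dir, "test_config.ini")
    with open(config_path, "w") as handle:
        handle.write("[database]\nhost = localhost\nport = 5432\nname = component_test\n")

    config = load_test_config(config_path)
    print(config["database"]["host"], config.getint("database", "port"))
```

Every test suite (accuracy, failure, stress) would then call the same loader, so a user edits one file to point all tests at their database.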

Code Origin

Tests should never utilize a submitter’s code. All code should be your own. The only exception to this is the following: when reviewing an upgrade to an existing code base, you may choose to reuse the existing tests from the previous version (provided that they are still valid).

Standard Reviewer Payments

Reviewer payments are determined by various factors such as challenge prize amount, the number of submissions that passed screening, and the track of the challenge. Each challenge type has a review incremental coefficient assigned to it, and the coefficients vary with each challenge type. Primary reviewers earn compensation for the screening payment, review payment, aggregation payment and final review payment. Secondary reviewers only earn compensation for the review payment.

Screening payment

The payment for the primary reviewer to do the screening (Primary Screener role in the Online Review) depends on the number of submissions and is computed in the following way:

Screening Payment = N * SIC * Prize

where N is the number of submissions, SIC is the Screening Incremental Coefficient (see the table below) and Prize is the 1st place prize value of the competition.
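As a worked example of the formula, the sketch below computes the screening payment with exact decimal arithmetic. The function name and the sample figures (4 submissions, a $1000 first-place prize, SIC of 0.01) are illustrative.

```python
from decimal import Decimal


def screening_payment(submissions, sic, prize):
    """Screening Payment = N * SIC * Prize."""
    return submissions * Decimal(sic) * Decimal(prize)


# 4 submissions, SIC = 0.01, first-place prize of $1000.
print(screening_payment(4, "0.01", "1000"))  # prints 40.00
```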

Review payment

The payment for doing the review (Reviewer, Accuracy Reviewer, Failure Reviewer, Stress Reviewer roles in the Online Review) depends on the number of submissions which passed screening and is computed in the following way:

Review Payment = (BRC + N * RIC) * Prize

where N is the number of submissions which passed screening, BRC is the Base Review Coefficient (see the table below), RIC is the Review Incremental Coefficient (see the table below) and Prize is the 1st place prize of the competition.
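As a worked example of the formula, the sketch below computes the review payment for 3 submissions that passed screening, using a BRC of 0.13, an RIC of 0.05, and a $1000 first-place prize. The function name and the sample figures are illustrative.

```python
from decimal import Decimal


def review_payment(passed_screening, brc, ric, prize):
    """Review Payment = (BRC + N * RIC) * Prize."""
    return (Decimal(brc) + passed_screening * Decimal(ric)) * Decimal(prize)


# (0.13 + 3 * 0.05) * 1000
print(review_payment(3, "0.13", "0.05", "1000"))  # prints 280.00
```

Note that the base coefficient is paid even when no submission passes screening, since only the second term scales with N.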

Aggregation payment

The payment for doing the aggregation (Aggregator role in the Online Review) is $10 for all development competitions.

Final Review payment

The payment for doing the final review (Final Reviewer role in the Online Review) is computed in the following way:

Final Review Payment = FRC * Prize

where FRC is the Final Review Coefficient (see the table below) and Prize is the 1st place prize of the competition.

Review Payment Coefficients

| Type | SIC | BRC | RIC | FRC |
|---|---|---|---|---|
| Architecture | 0.01 | 0.12 | 0.05 | 0.05 |
| Assembly & Code | 0.01 | 0.13 | 0.05 | 0.05 |
| Test Suites | 0.01 | 0.12 | 0.05 | 0.03 |
| F2F | 0 | 0.02 | 0.04 | 0 |
| Other tracks | 0.01 | 0.08 | 0.03 | 0.03 |
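Putting the formulas and the coefficient table together, the following sketch estimates what a primary and a secondary reviewer would earn on one challenge. It assumes that all four components (screening, review, aggregation, final review) apply to the primary reviewer and that no penalties are deducted; the function name and the sample figures are illustrative.

```python
from decimal import Decimal

# (SIC, BRC, RIC, FRC) per challenge type, copied from the table above.
COEFFICIENTS = {
    "Architecture": ("0.01", "0.12", "0.05", "0.05"),
    "Assembly & Code": ("0.01", "0.13", "0.05", "0.05"),
    "Test Suites": ("0.01", "0.12", "0.05", "0.03"),
    "F2F": ("0", "0.02", "0.04", "0"),
    "Other tracks": ("0.01", "0.08", "0.03", "0.03"),
}
AGGREGATION_PAYMENT = Decimal("10")  # flat fee for development competitions


def reviewer_payments(challenge_type, prize, submissions, passed_screening):
    """Return (primary_total, secondary_total) for one challenge."""
    sic, brc, ric, frc = (Decimal(c) for c in COEFFICIENTS[challenge_type])
    prize = Decimal(prize)
    screening = submissions * sic * prize
    review = (brc + passed_screening * ric) * prize
    final_review = frc * prize
    primary = screening + review + AGGREGATION_PAYMENT + final_review
    return primary, review  # secondary reviewers earn the review payment only


primary, secondary = reviewer_payments("Assembly & Code", "1000", 4, 3)
print(primary, secondary)  # prints 380.00 280.00
```

For this Assembly & Code challenge the primary total breaks down as 40 (screening) + 280 (review) + 10 (aggregation) + 50 (final review).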

Late Deliverables

Topcoder tracks all late deliverables in Online Review in order to improve the quality and the reliability of the software development process.

Each project in Online Review consists of a set of phases, each with its own timeline. Failure to meet the timeline for any of the phases delays the entire Online Review project, which in turn delays dependent projects. Whenever a member fails to meet the current timeline, it is logged in the system for further investigation. These statistics are used by Topcoder to identify weak points in the process and to apply proper disciplinary measures to late members if needed.

A lateness penalty will be applied to the reviewer’s payment if a reviewer is late for any of their deadlines without a good reason, such as a phase opening earlier than expected, delays with requisitioning environments, or outstanding questions. If there are good reasons for delays, make sure you communicate them to the challenge manager/copilot as early as possible. The amount of money lost to a lateness penalty depends on how long a delay it causes.

Previous Late Deliverables

One of the factors we rate reviewers on is on-time completion of deliverables. If you would like to review your previously missed timelines, you can use the Late Deliverables Search page to view your past statistics and the details of all of your late deliverables.

Payment Penalties

The payment for late members in Online Review is reduced based on their total delay. A member’s total delay for a project is the sum of all of their unjustified late records for that project. The payment is reduced by 5% for any non-zero delay, plus 1% for each full hour of delay.

Additionally, each unjustified rejected final fix reduces the winner’s payment by 5%.

The maximum possible penalty is 50%.
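The lateness rule can be sketched as a small calculation. This is one reading of the rule rather than Topcoder's actual implementation: it assumes the total unjustified delay is measured in minutes, that the 50% cap applies to the lateness penalty, and it leaves out the separate final fix deduction for winners. The function names are hypothetical.

```python
from decimal import Decimal

MAX_PENALTY_PERCENT = 50


def lateness_penalty_percent(delay_minutes):
    """5% for any non-zero delay, plus 1% per full hour, capped at 50%."""
    if delay_minutes <= 0:
        return 0
    full_hours = delay_minutes // 60
    return min(MAX_PENALTY_PERCENT, 5 + full_hours)


def apply_penalty(payment, delay_minutes):
    """Return the payment that remains after the lateness penalty."""
    percent = lateness_penalty_percent(delay_minutes)
    return Decimal(payment) * (100 - percent) / 100


print(lateness_penalty_percent(30))   # prints 5 (late, but under one full hour)
print(lateness_penalty_percent(150))  # prints 7 (5% plus 2 full hours)
print(apply_penalty("280", 150))      # prints 260.4
```

Under these assumptions the cap is reached at 45 full hours of total delay.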