December 23, 2021

Azure DataBricks ETL

This article will discuss using Azure Databricks ETL. You can use Azure Databricks as a software on cloud to execute Spark.

Introduction

Data can be Extracted, Transformed, and Loaded (ETL) from one source to another using an ETL tool. Azure Databricks ETL provides capabilities to transform data using different operations like join, parse, pivot rank, and filter into Azure Synapse.

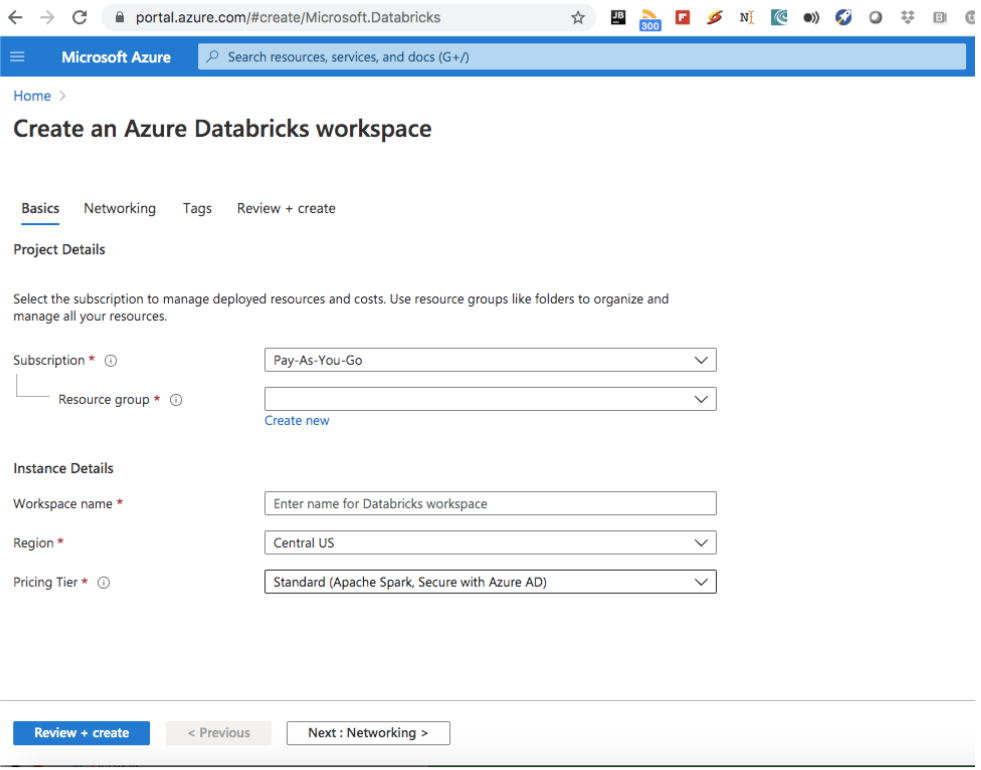

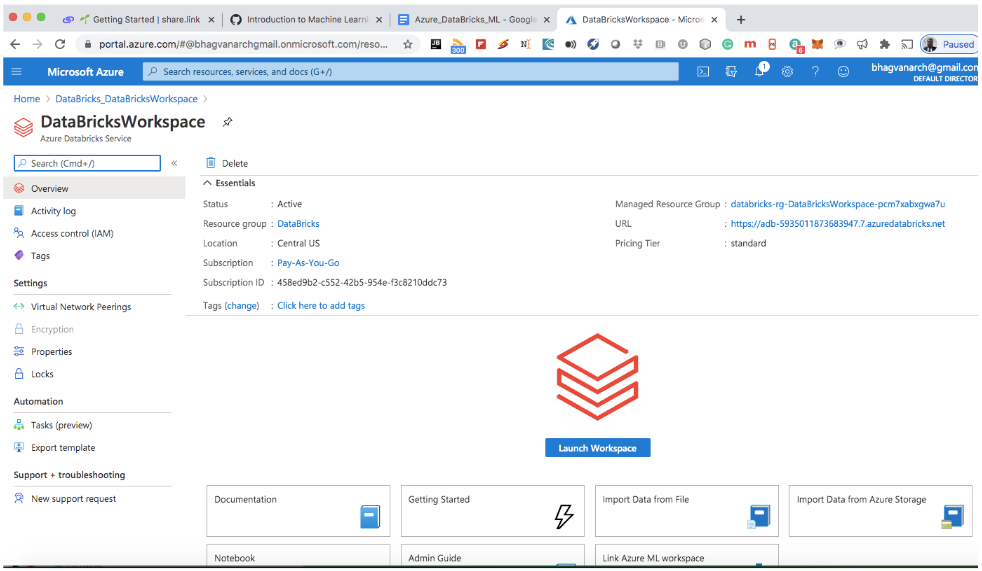

Creation of Azure Databricks service

You can use Databricks on trial or pay as you go. The pay as you go model depends on the usage of underlying services utilized and virtual machines.

You can create a Databricks account as shown below:

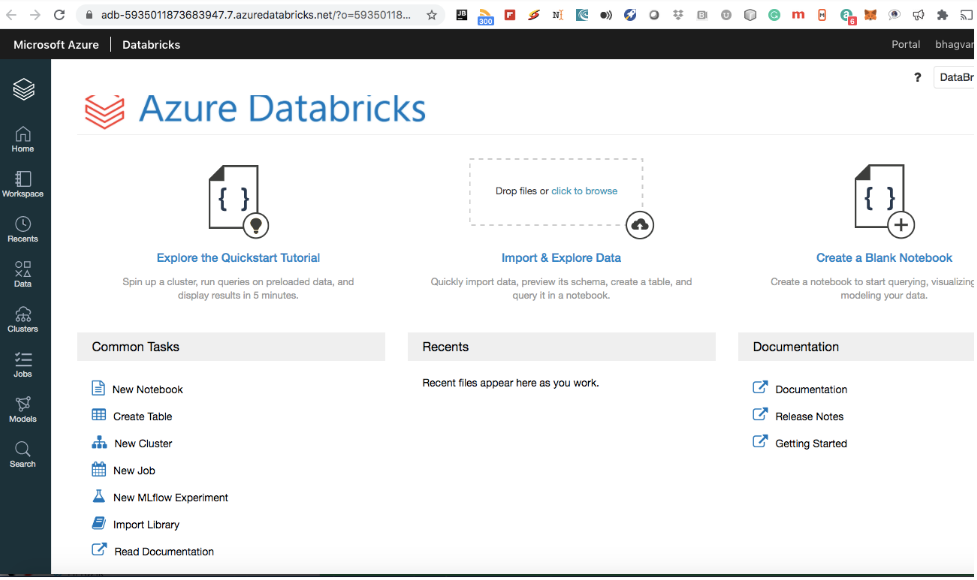

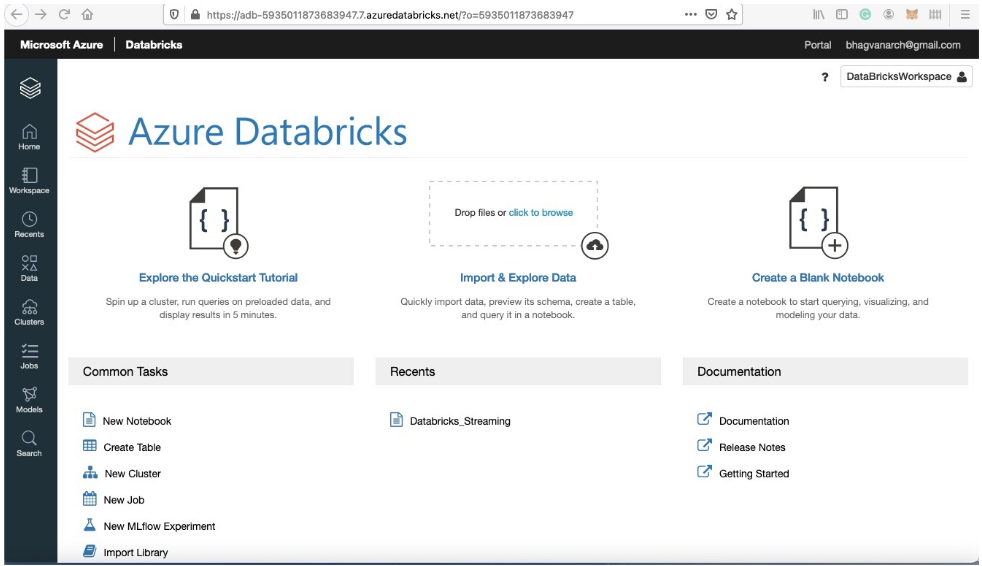

You can see the workspace created for Azure DataBricks ETL.

You can see in the left menu the items for cluster creation and notebook creation.

Now let us create the Spark cluster.

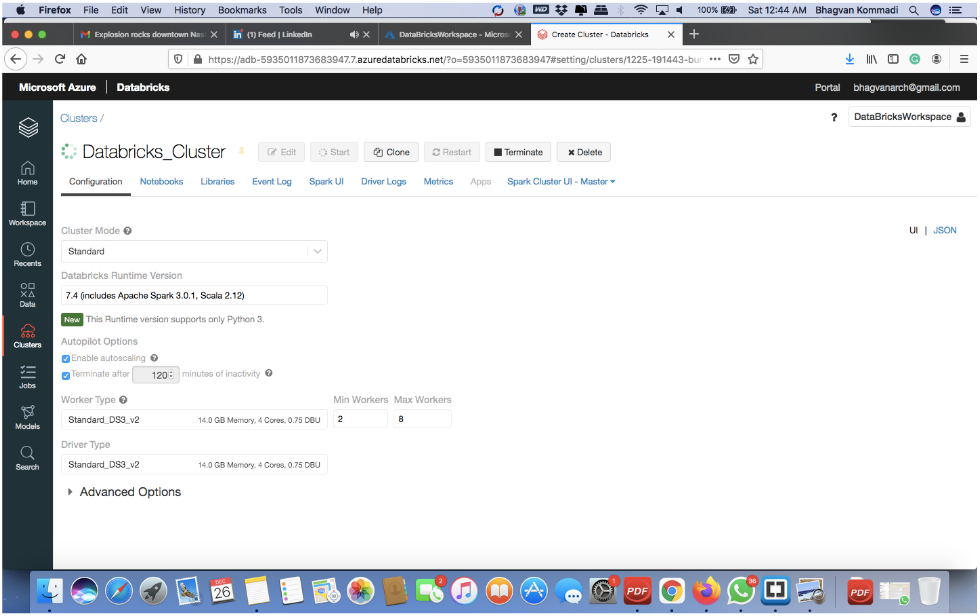

Creation of a Spark cluster

The Apache Spark team created Databricks for use in data analysis, data processing, and data engineering. Another important feature is job API for data management. You can process data using job execution by data scientists, engineers, and analysts. You can execute applications using the job API by using notebooks on the cluster and managing the job execution results with CLI, API, and alerts.

You can use a Databricks cluster for analysis, streaming analytics, ad hoc analytics, and ETL data workflows. The Jupyter Notebook on Databricks contains commands. There are two types of clusters - job clusters and all purpose clusters. You can use all purpose clusters for data analysis using notebooks and job clusters for executing the jobs. Utilize all purpose clusters by using the user interface, command line interface and REST API. Collaboration with other users can be done on different clusters.

Let us first start with Databricks home to get cluster access.

Clusters can be created in the UI. You can use the job cluster for executing jobs and the cluster can be stopped once the job is done. You need to have a job to get started on cluster creation. Permissions need to be granted for creation of jobs and clusters.

You can click on the clusters icon on the sidebar from the Databricks home. Click on create cluster now.

To name the cluster, input its name using the configuration tab. Notebooks can also be added for query and command execution.

Creation of a file system in the Data Lake Storage Gen2 account.

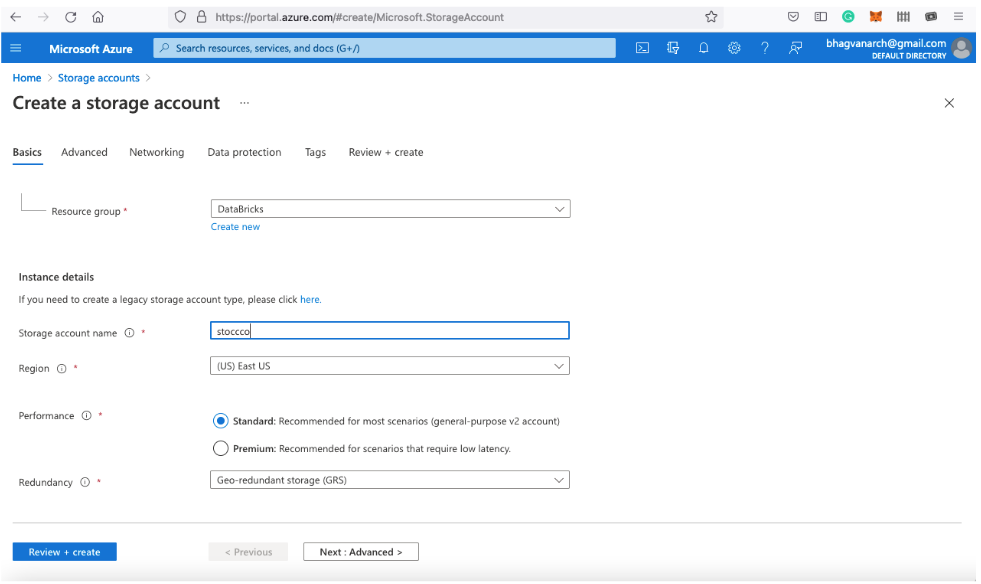

You can create a notebook in Azure Databricks workspace and then execute code snippets to configure the storage account. Let’s start creating a storage account.

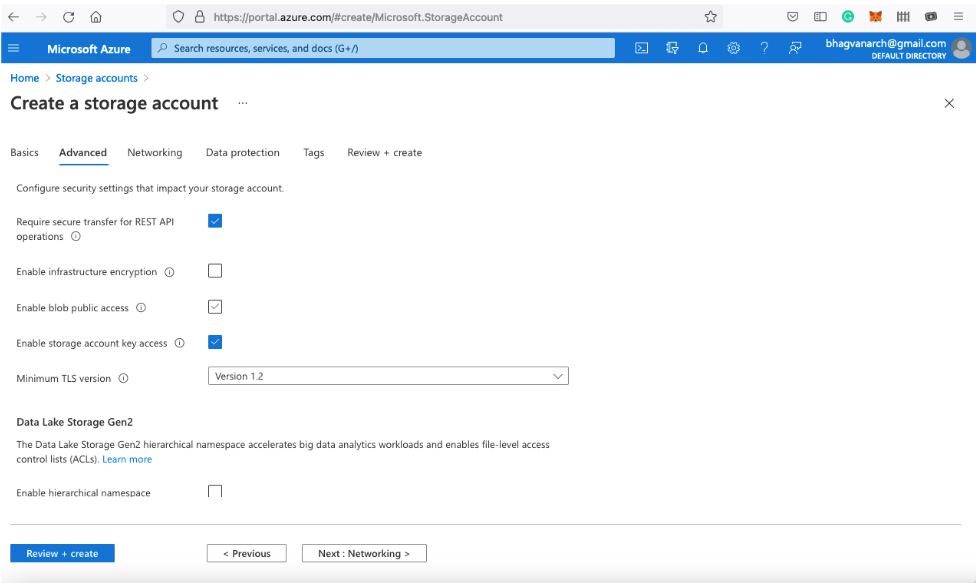

Now name the storage account using the UI and deselect the option “Enable blog public access”.

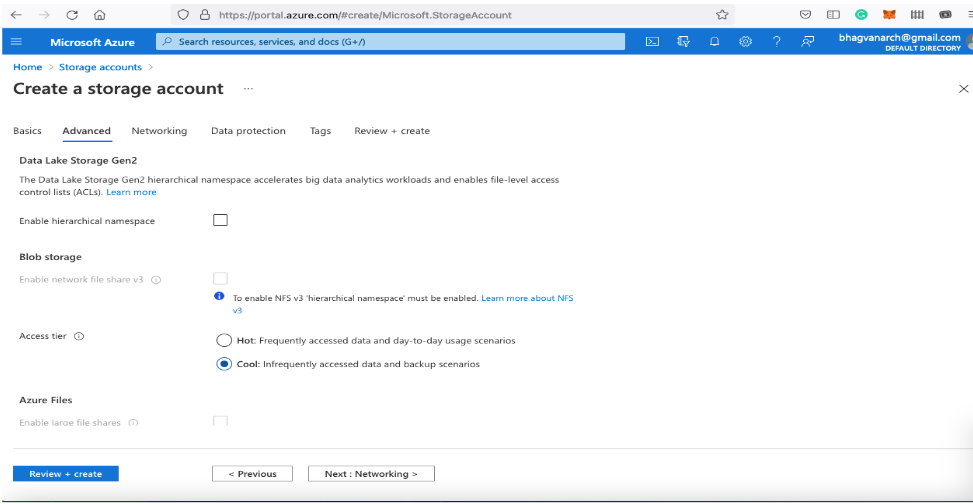

Now select the cool Access Tier as shown below:

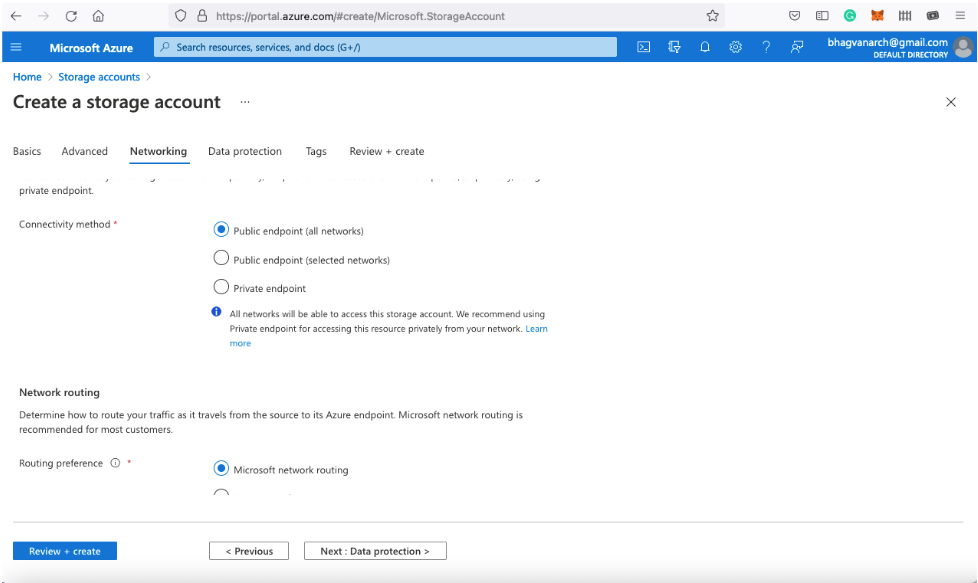

You can now click Next and review the networking options.

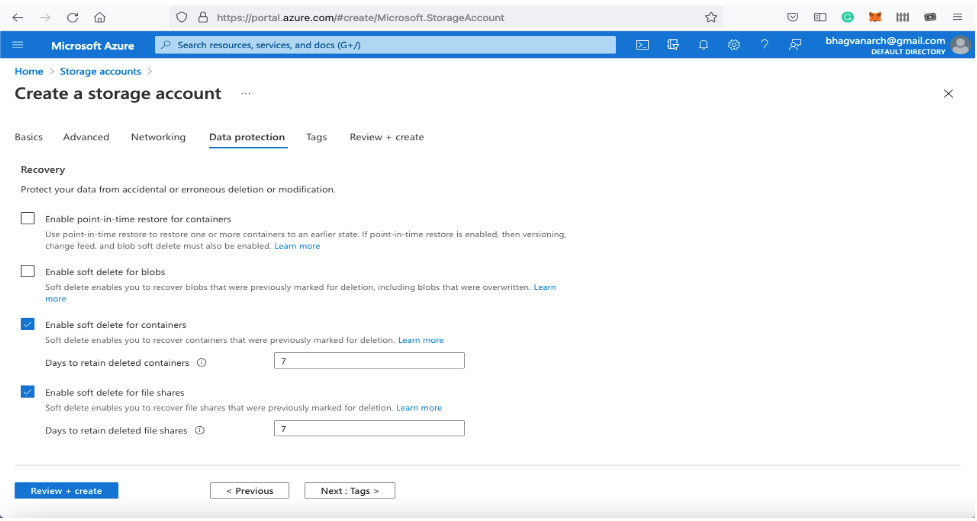

You can click Next and deselect the data partition like “Enable soft delete for blobs”.

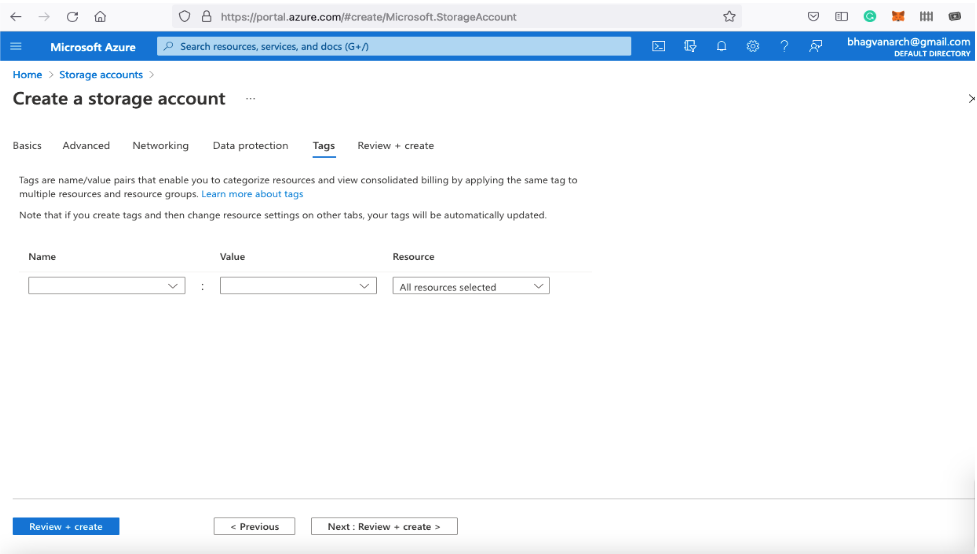

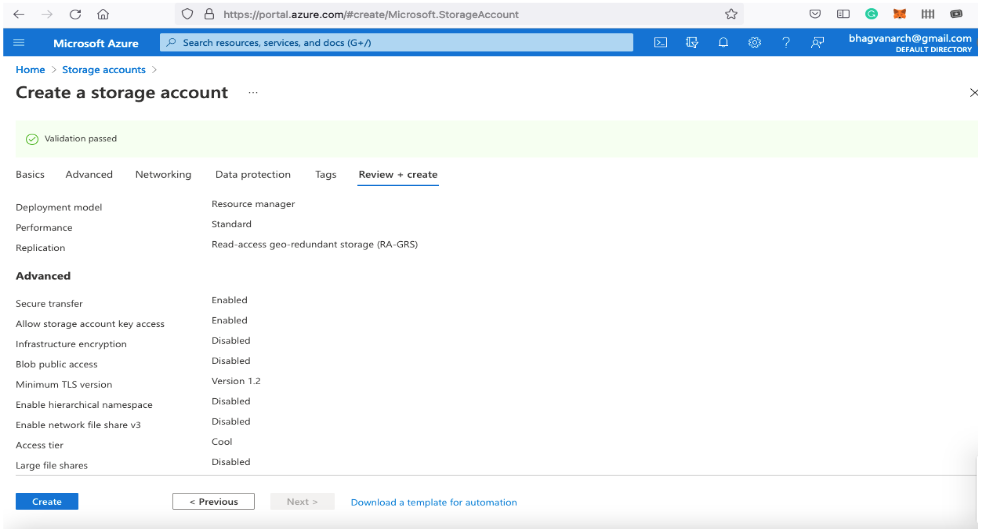

Finally, you can review and finish creating the storage account.

Now verify the configuration before the creation of a storage account.

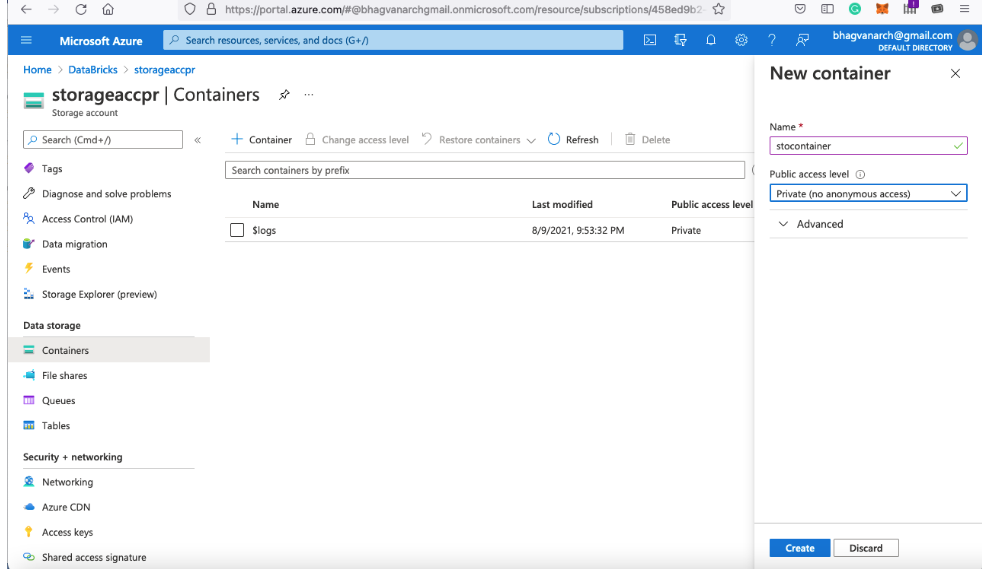

Next, you can create a storage container in the storage account.

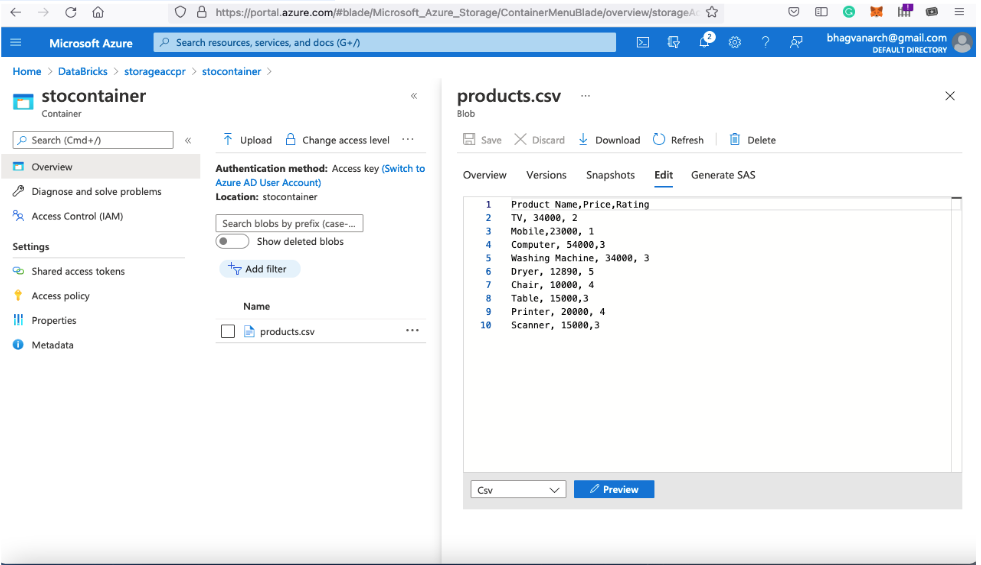

Create a new container to upload a file -“Products.csv”.

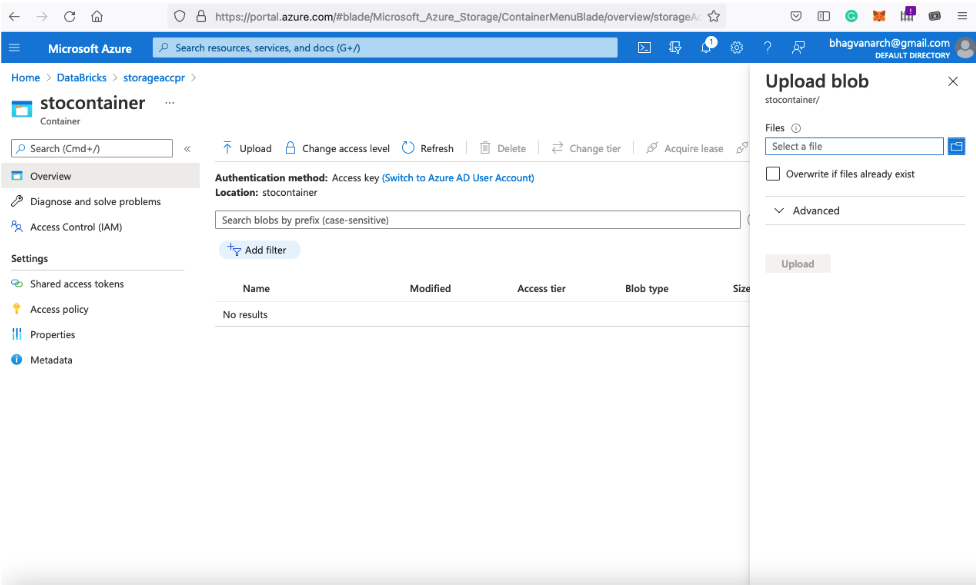

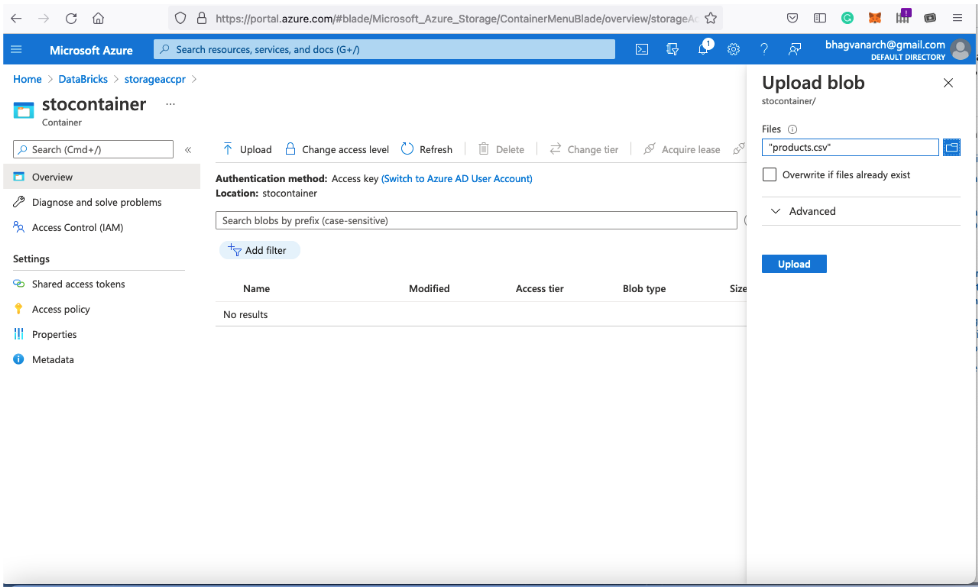

Next, click on the upload button to create a blob in the storage container.

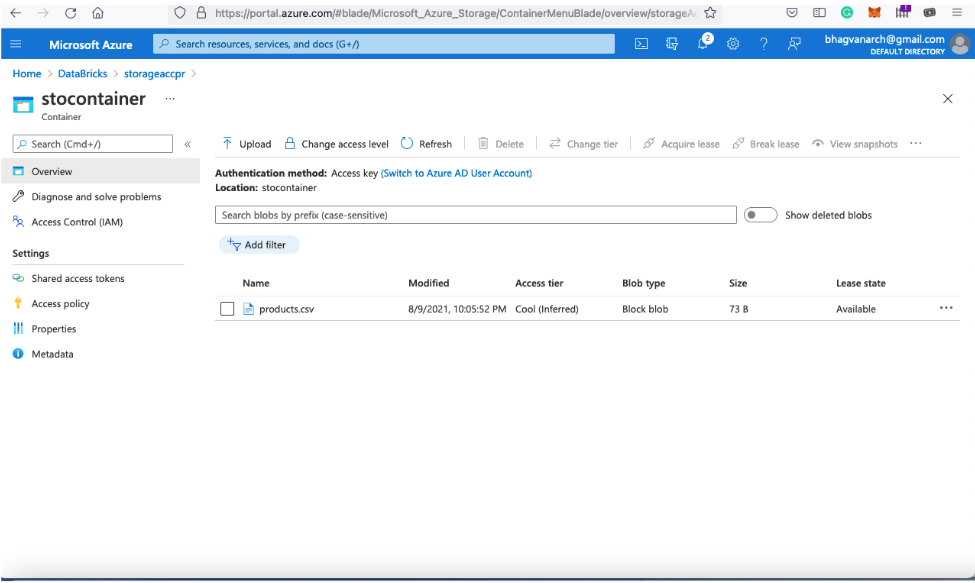

Finally, you can see the created blob in the storage container.

You can also preview the csv file.

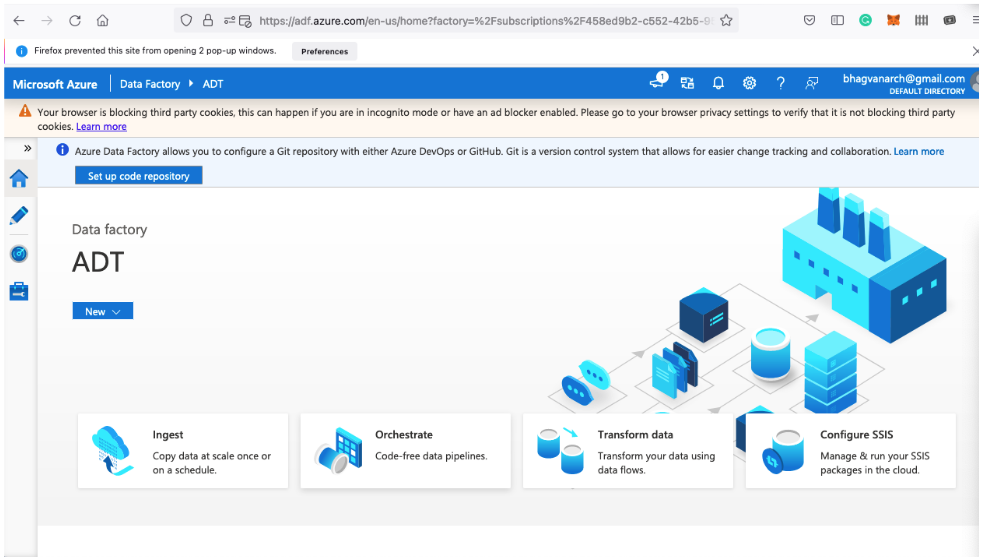

Let us now look at the creation of data flow. You need to navigate to the data factory view.

Creation of Data Flow

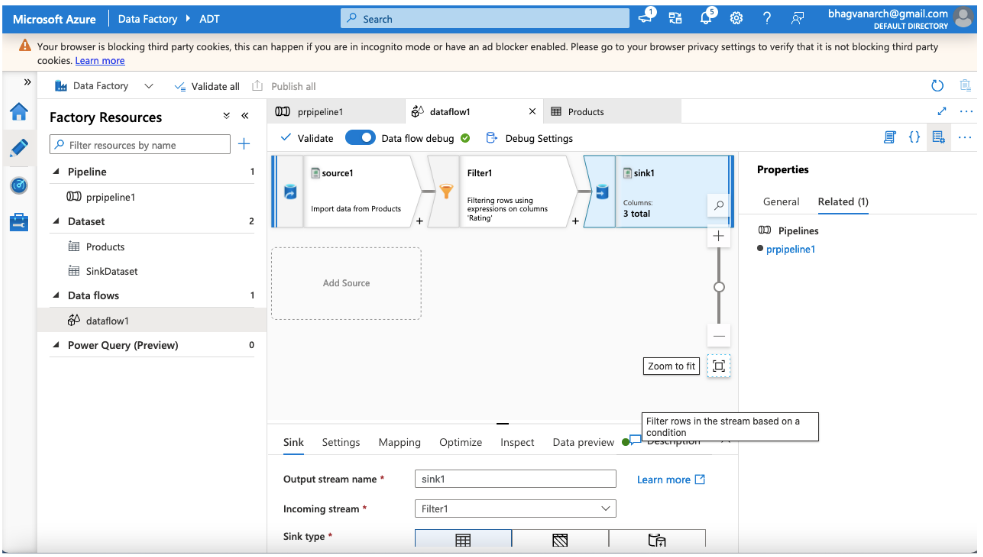

To start with, you can create a data flow using the data factory orchestrate option.

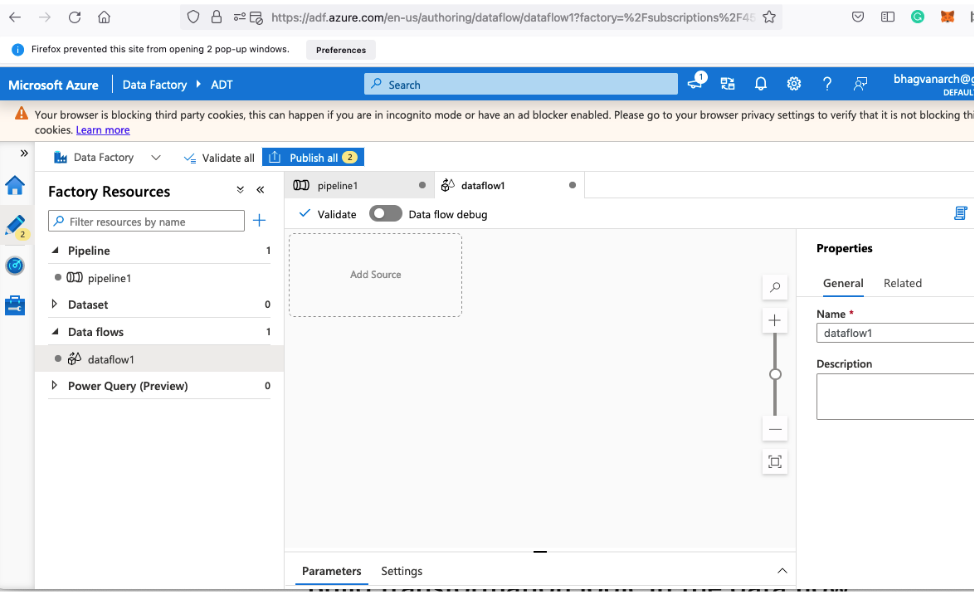

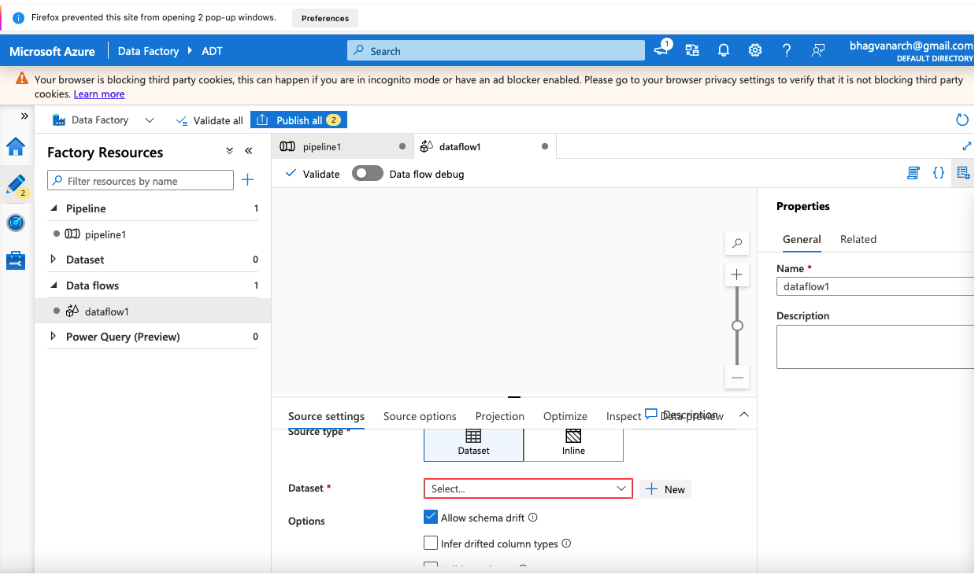

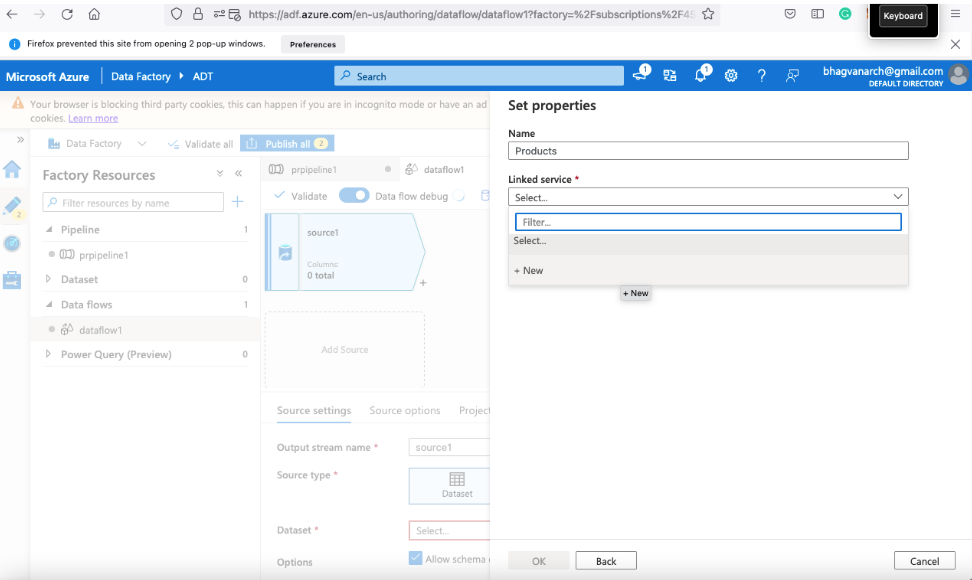

You can name the data flow by typing the name. Create a new dataset by clicking on Add Source.

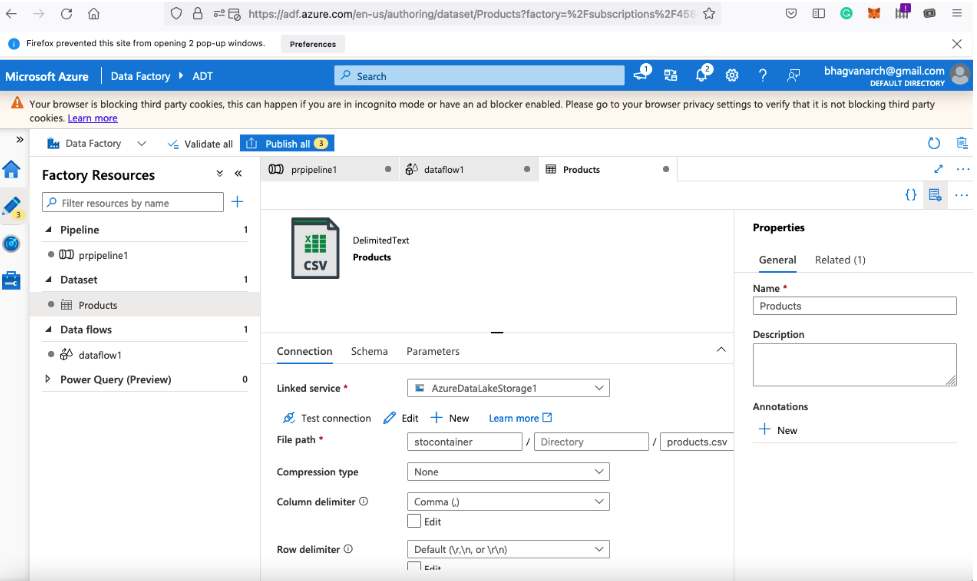

Next, you can name the dataset by typing the name. You can create a new linked service as displayed in the snippet below:

Creation of Service Principal

You can create a service principal which provides access to the resources required. You can assign a role to the service principal which can be at the resource level, resource group, or subscription level. The service principal can be created by using Azure portal or Power Shell. You need access to Azure Active Directory to register an application. After creation of the application, the role is assigned to the service principal based on this role.

Gathering sample data and uploading it to the Azure Data Lake Storage Gen2 account.

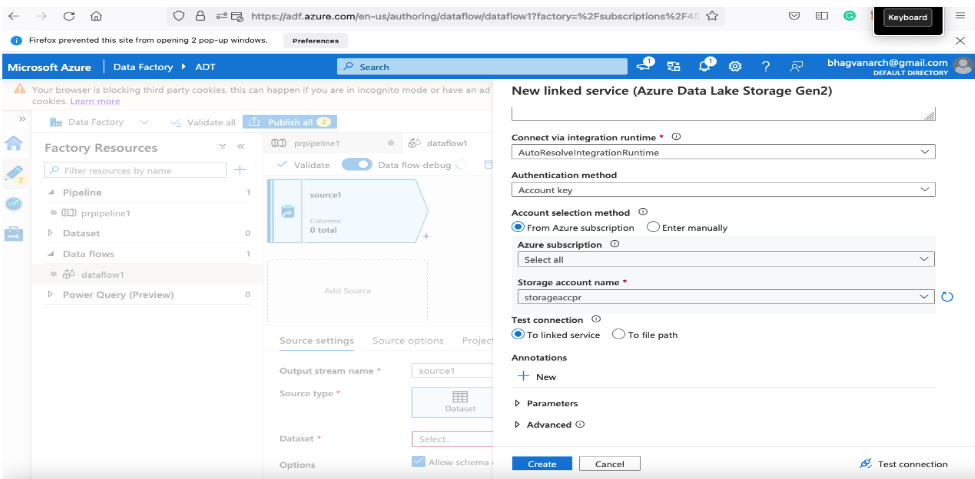

Next, you can select the service type - Azure Data Lake Storage Gen2 option and dataset - CSV option.

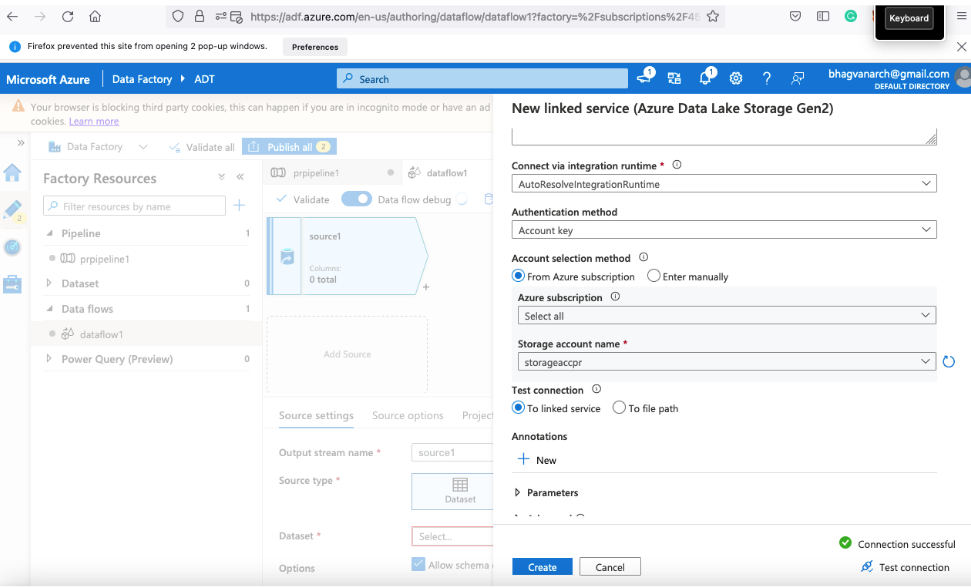

Next, you can test the connection after selecting the subscription type.

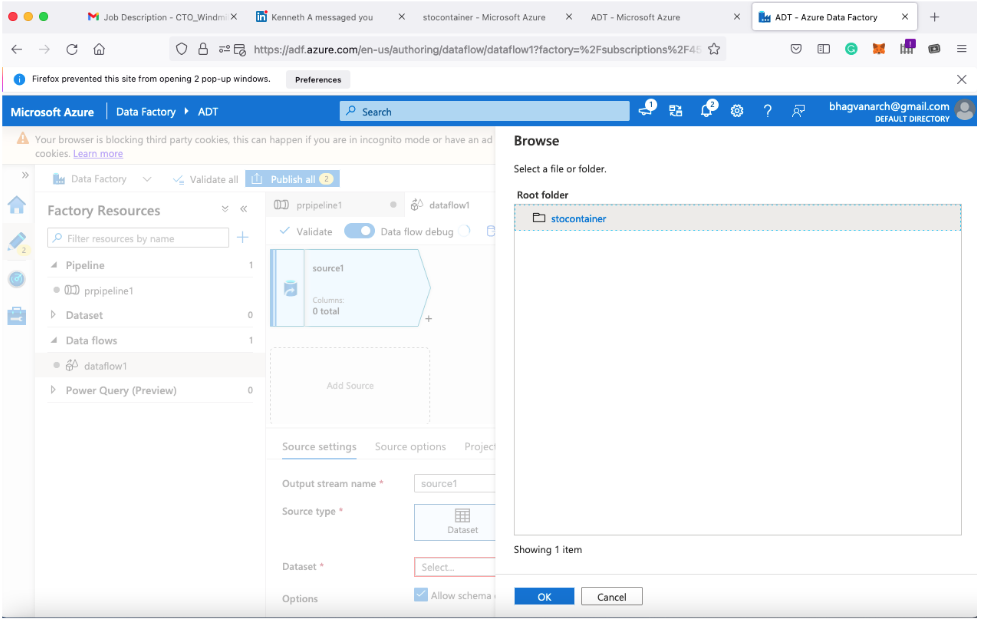

You can check if the testing was successful, then look at the storage container and the blob for the dataset.

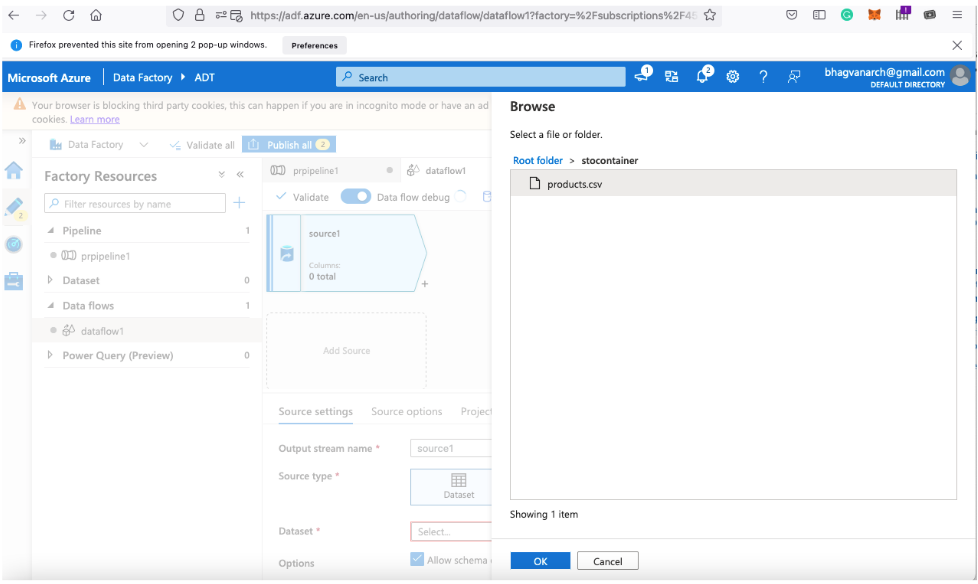

Next pick the file products.csv as shown in the screenshot below:

Name the dataset by typing the name.

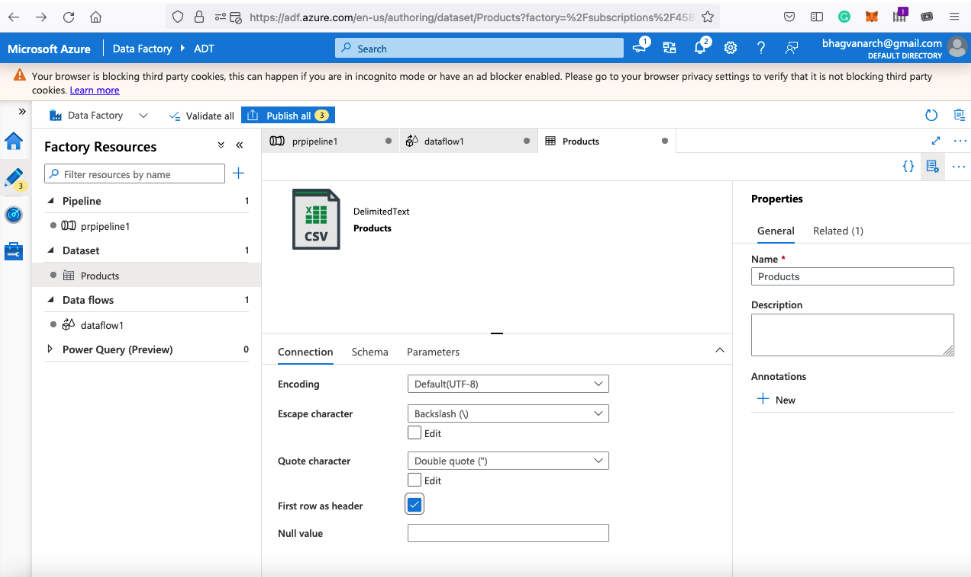

Now check “First row as header” as shown in the screenshot below.

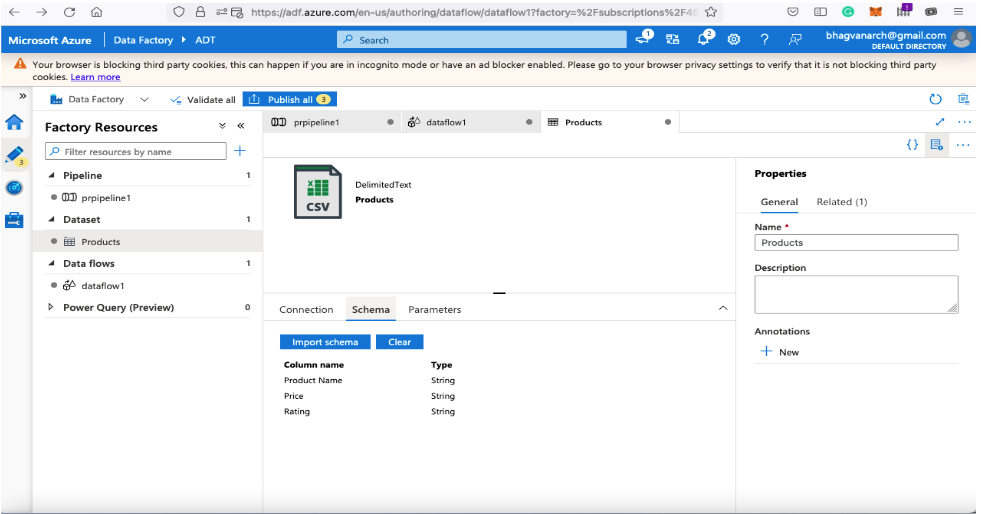

You can now browse the file schema as shown in the image below:

Extraction of data from the Azure Data Lake Storage Gen2 account

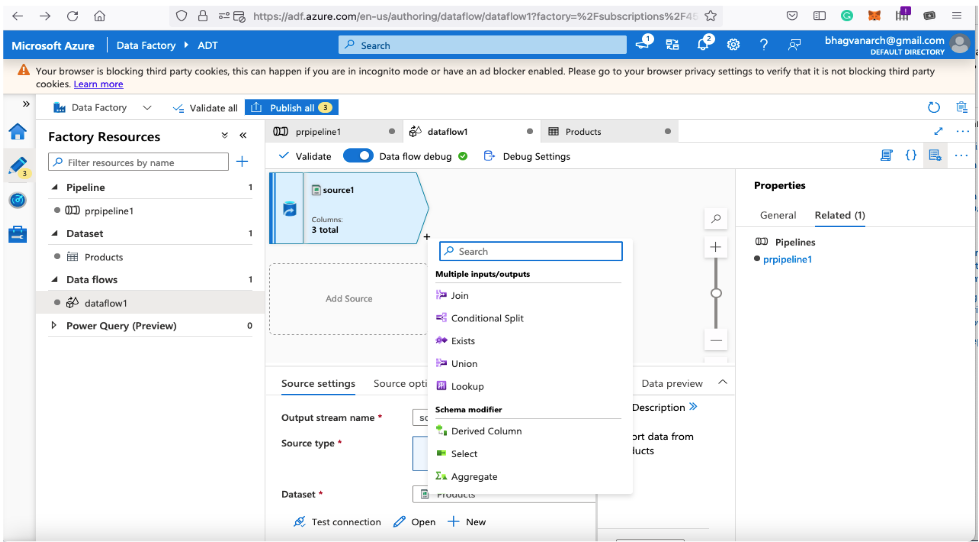

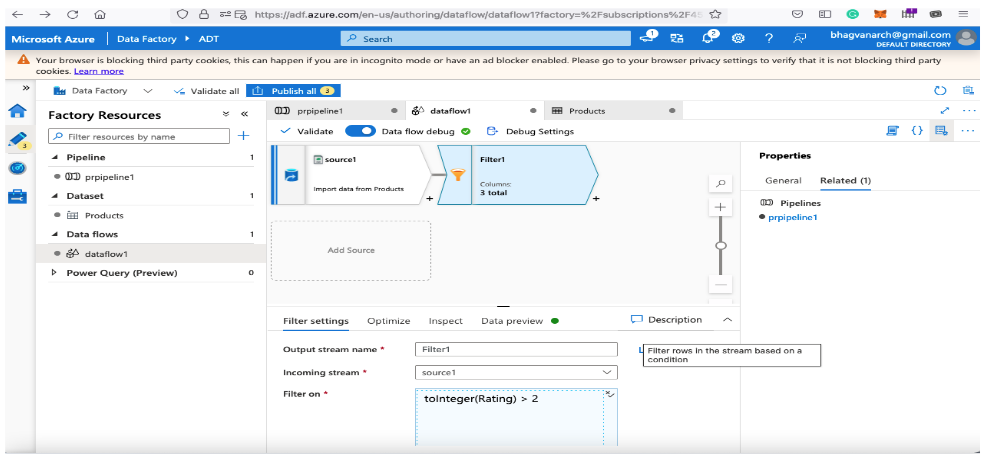

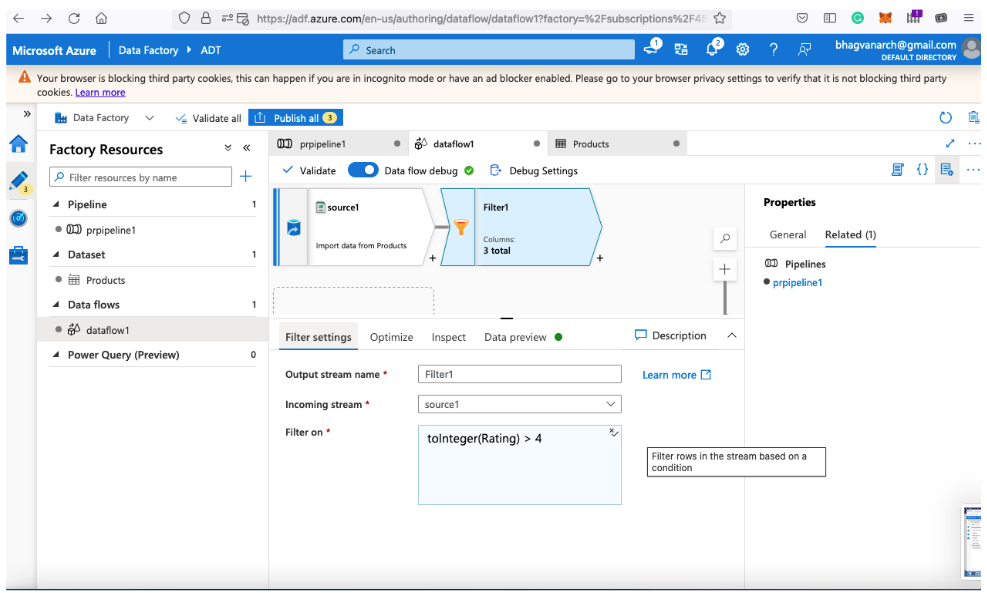

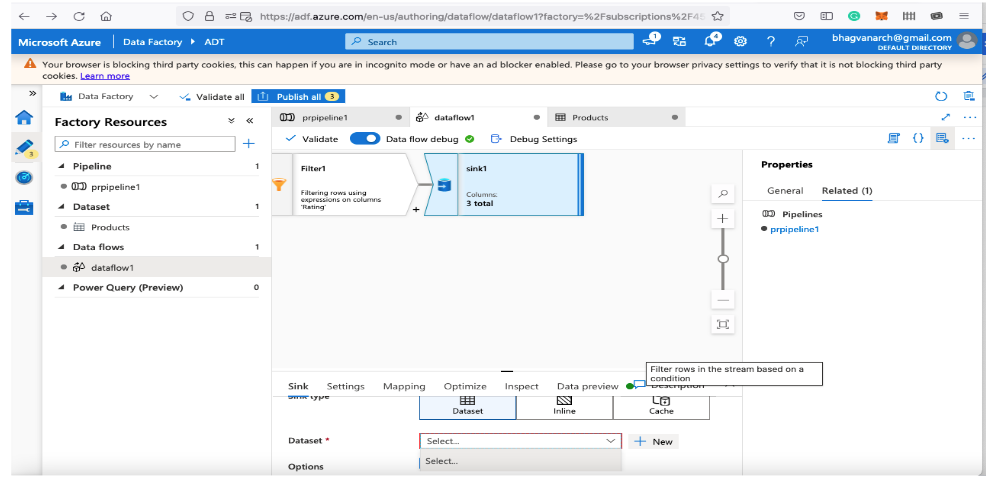

Next, you can add a filter to the data flow as shown in the picture below:

Transformation of data in Azure Databricks

Type in the boolean expression - “products having rating greater than 2”.

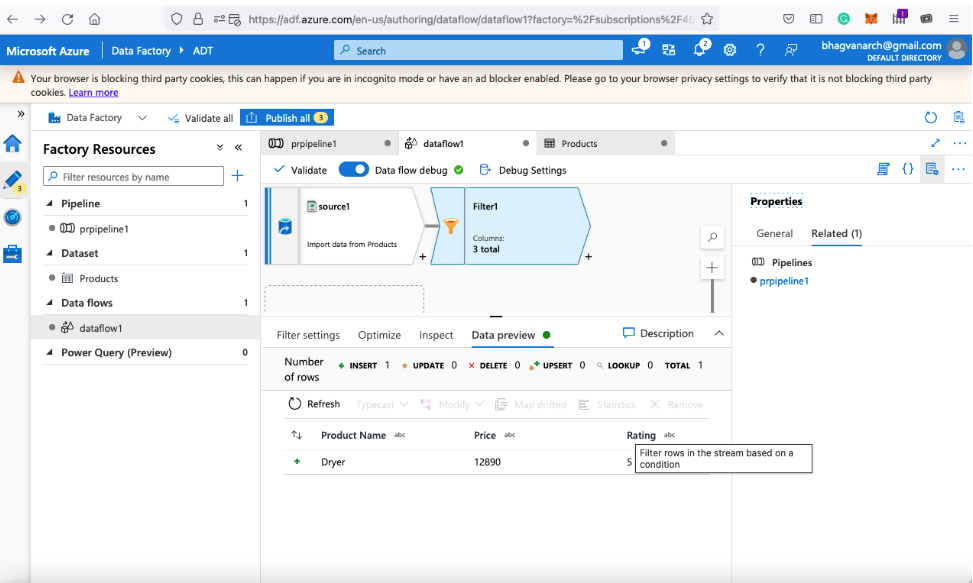

You can preview the data after filtering as shown in the screenshot below:

Loading of data into Azure Synapse

Now you can create a sink to load the data into the Azure Synapse. Create a pipeline and see the properties.

You can create a sink with a new dataset by clicking on the New button.

Sink can be Azure Synapse and the connector can be used to upload the data into Synapse.

The connector used for Azure Synapse saves the data in Azure blob first and then Azure Synapse.

In the next part of the series, we will look at working with Azure Databricks Notebook.

References:

Azure DataBricks Platform: https://docs.microsoft.com/en-us/azure/databricks/