October 5, 2021

Importing CSV files into BigQuery

DURATION

15mincategories

Tags

share

Hello there. Today we will be talking about importing CSV files into Google BigQuery. Google BigQuery is a serverless data warehouse created by Google in 2011. It is a cloud-based analytics data warehouse that can analyze billions of rows of data in minutes. This is advantageous because a significant quantity of data can be analyzed without any overhead cost for infrastructure that would normally accompany such capabilities. All of that is managed in the cloud. BigQuery also has provisions for machine learning.

BigQuery has a built-in query engine that provides the opportunity to use SQL to query data. BigQuery aso has some default datasets that are made available for free. In this article we will be exploring importing CSV Excel files into BigQuery. This is important because, although there are existing datasets on BigQuery, sometimes there may be a need to analyze data during the lifecycle of a project that isn’t already in the warehouse. This article will walk you through how to do that.

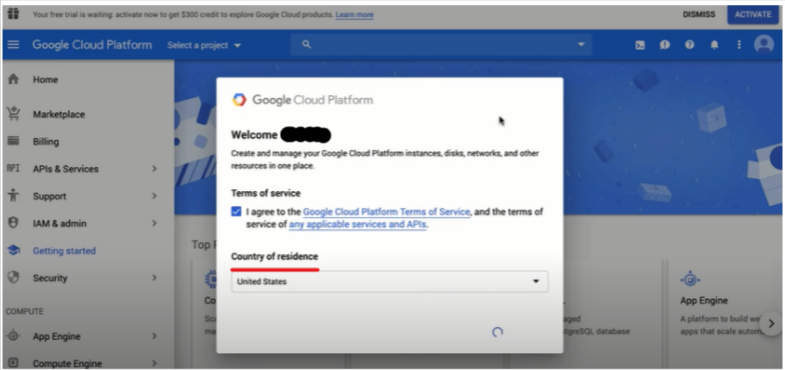

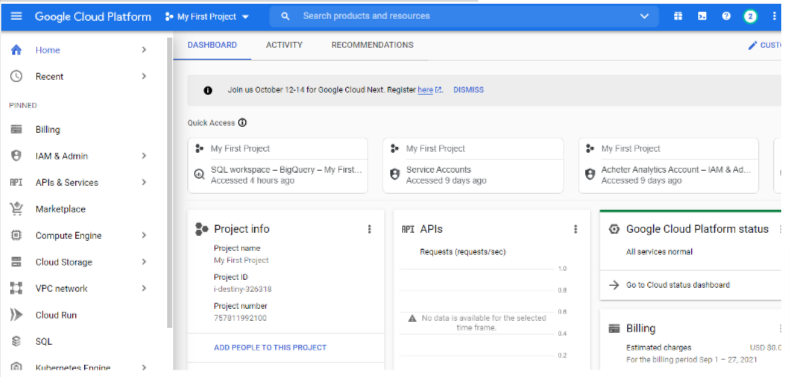

The first thing to do is to go to console.cloud.google.com/getting-started on your browser or the BigQuery Google search. It doesn’t matter if you don’t have an account, all you need is a gmail account/address. The link will take you to a landing page that looks like this:

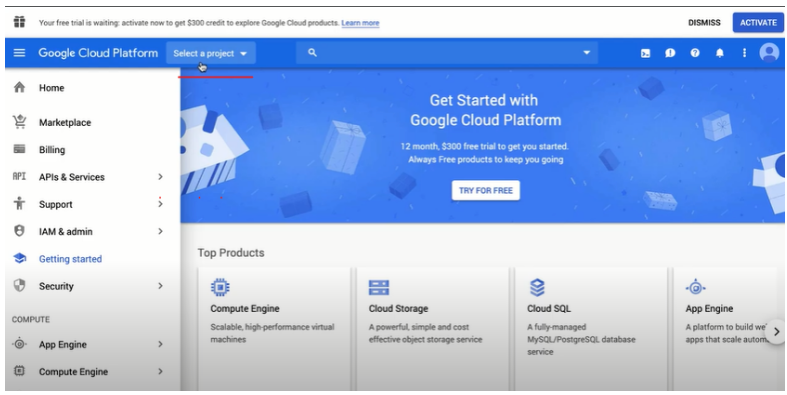

There is also the option to pick or choose your country of residence, highlighted in red. After agreeing to the terms of service BigQuery will take you to another landing page, one where you can have a look at your console. There are options in the menu on the left hand-side of the image below. It is important to first select a project, this is highlighted in red. A project on BigQuery will contain (in our case) all the SQL code and sample data that is relevant for this project.

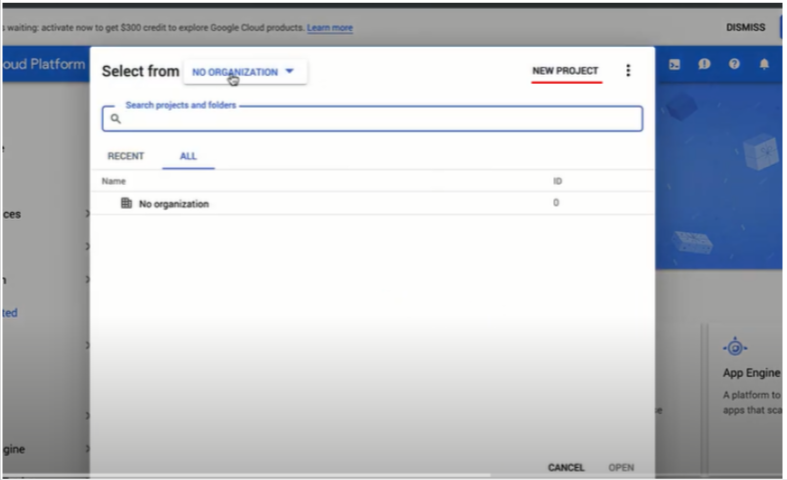

Selecting a project will open up another window where you can click on “NEW PROJECT” highlighted in red.

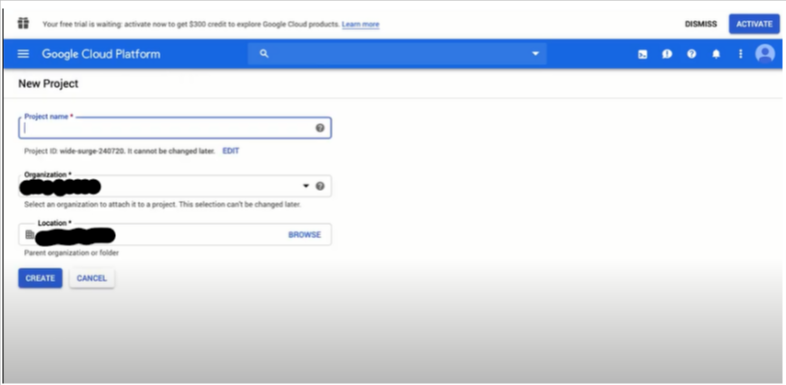

Creating a new project will provide you with the option to name the project. After that, just click on the CREATE button like in the image below.

The image below is where a host of services on BigQuery can be accessed from, take note of the left hand-side of the image below.

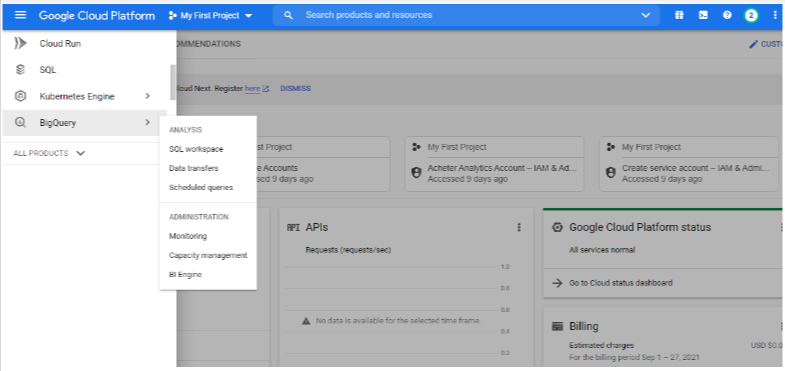

To access BigQuery, scroll down the menu of services offered on the left-hand side and look for the “BigQuery” option then click on it.

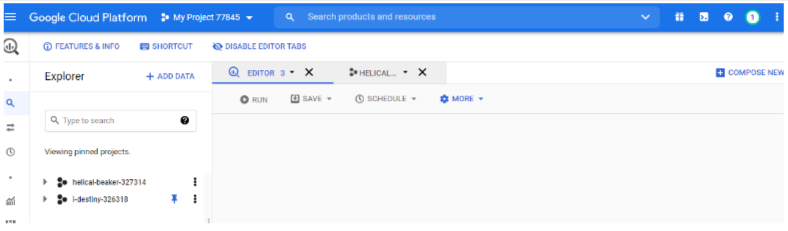

This should take you to the BigQuery IDE where SQL queries can be typed out and executed. Like in the image below, the IDE allows for standard Postgresql and MySQL-type queries.

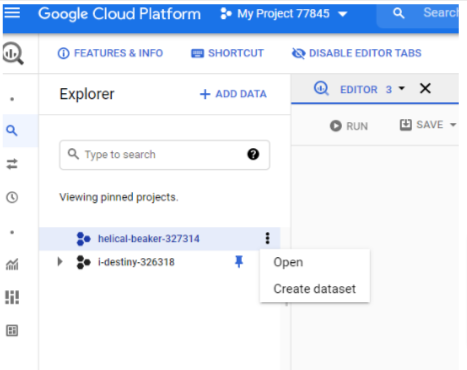

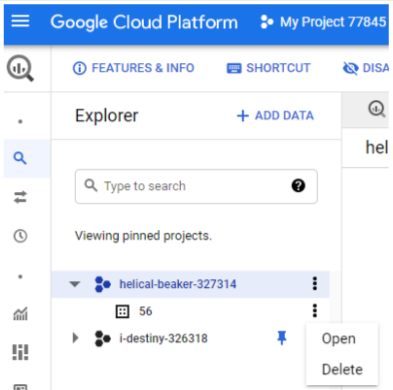

The next thing is to upload the CSV file. To do that, click on the three black buttons beside any of the pinned projects like in the image below. An option should appear to “Create Dataset”.

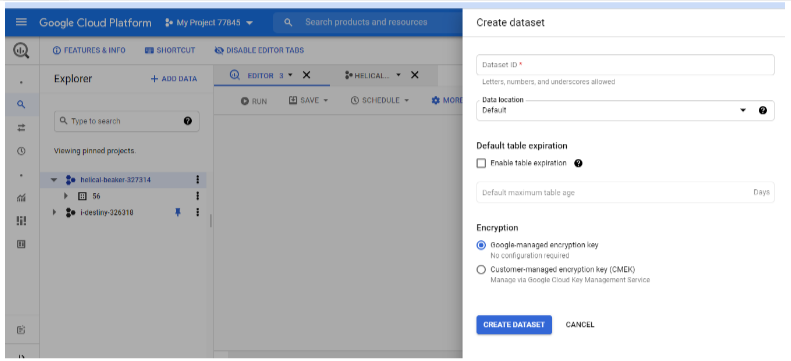

The “Create dataset” option will open up a page on the right-hand side of the screen.

Here you can initiate the dataset to be created. There is an option, Default table expiration, to determine how long a table should last. You can fill in the Dataset ID with letters, numbers or underscores. I’ve named the dataset for this article 56.

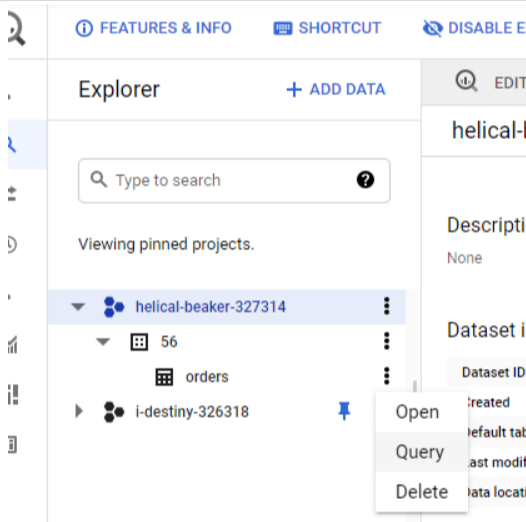

Click on the three dots beside the 56 and click on the option “Open”.

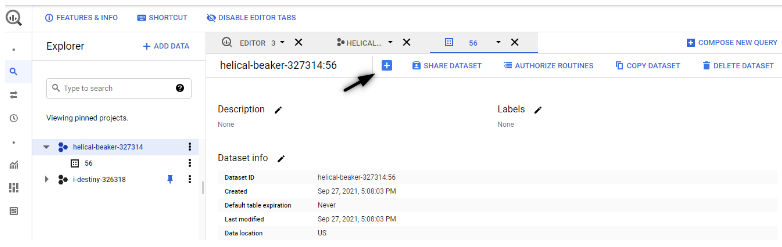

Opening the dataset just created should take you to a view where the details of the dataset 56 are displayed. The arrow on the image below points to an icon that, when clicked on, creates a table within the dataset 56.

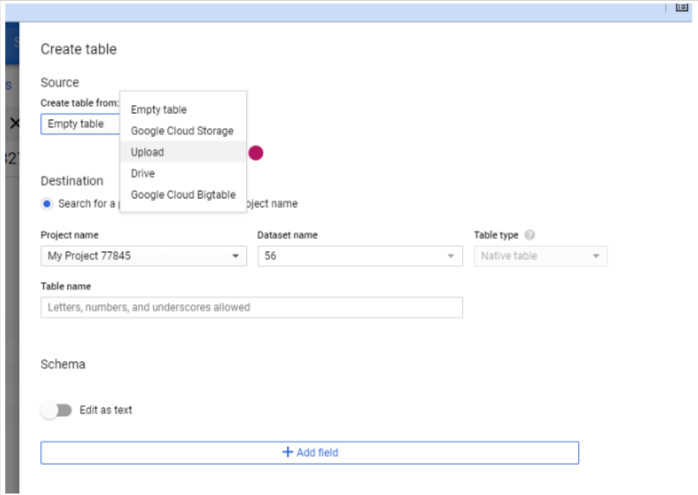

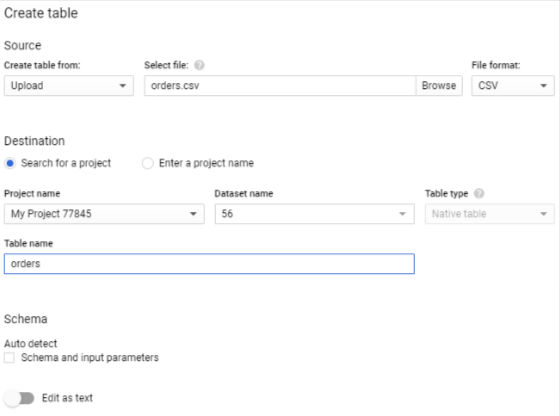

A window pops up that shows the different sources that can be used to collect data from create a table. The “Upload” option highlighted in purple is the option we will be using for the purposes of this article.

There is an option to select a CSV file from your laptop or desktop. An option for the file type is also specified in the File format section.

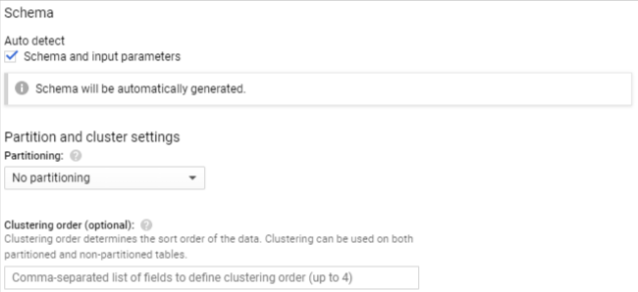

It is important to tick the shema option when importing files into a table like in the image below. The schema helps describe the data being loaded.

Once the data is in BigQuery you can view it by clicking on the “Query” option in the image below.

And voila! You’ve just imported a CSV file into BigQuery.