October 18, 2021

Loading CSV files to MySQL database via REST API and Sequelize models

DURATION

12mincategories

Tags

share

Sometimes when a large number of rows are to be added to a database, instead of adding values one by one, websites provide the option to upload a CSV (comma-separated values) file. Data is then able to be parsed and saved to the database.

In this article I am going to use MYSQL, along with sequelize ORM, to create a project with an attached database. You can use whatever database paradigm you prefer. Once our database and the API to create data are done, we will create some sort of functionality that will convert the CSV file to JSON. The important thing here is that we are not going to store the file anywhere on the system/server (other tutorials available online first need you to upload files to the server which does not scale well). This means that no excess space will be needed to store the file.

Let’s begin:

Setting up the project and database:

Create an empty folder and run the command git init inside of it using your terminal. This will give you a package.json file.

Now install some libraries that will be needed by typing the following:

npm i express dotenv sequelize sequelize-cli mysql2

Create a file called app.js in your project’s root folder. And write the following code in the file.

1

2

3

4

5

6

7

8

9

10

11

12

const express = require('express')

const app = express();

const PORT = 3000;

//Following lines are to make sure our app can parse the json data

app.use(express.json());

app.use(express.urlencoded({

extended: false

}));

app.listen(PORT, () => {

console.log('Server started on port 3000');

})

Here we are importing the express module and initializing it with an app variable. After doing that we started the server on port 3000.

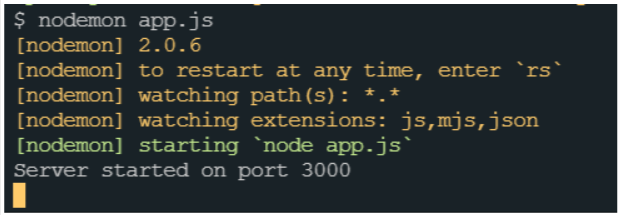

And now run nodemon app.js

If you see a message that the server started on port 3000, then you did everything right.

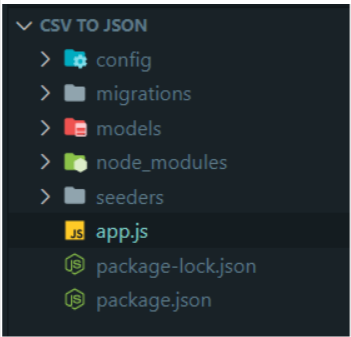

Now run sequelize init in terminal to get started with sequelize. Your folder should be looking like this now.

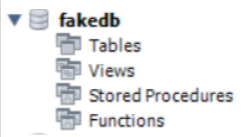

Now we will be connecting to our database. Open your MySQL workbench and create a database. I have called mine fakedb and, as you can see in the image below, it is empty for now.

Open the config/config.json file; there will be a key called development, like this. Now we have to replace the above values with correct values. In my case it looks as follows: you will have to change the values according to your SQL setup, but the host and dialect will be the same as mine.

1 2 3 4 5 6 7 8 9{ "development": { "username": "root", "password": null, "database": "database_development", "host": "127.0.0.1", "dialect": "mysql" }, }

Go ahead and delete the seeders and migrations folder, we don’t need that. In the models’ folder create a file called books.js as we will be working with books’ data. Our table will have an auto-incrementing id and the book title. Paste the following code in books.js:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

module.exports = (sequelize, DataTypes) => {

const Book = sequelize.define("Book", {

id: {

type: DataTypes.INTEGER,

autoIncrement: true,

primaryKey: true

},

title: {

type: DataTypes.STRING,

},

author: {

type: DataTypes.STRING,

}

});

return Book;

};

Add the following line below the import lines in app.js.

const db = require('./models');

This will allow us to use the models in the app.js file and now wrap the app.listen in the following way.

1

2

3

4

5

6

7

8

9

db.sequelize.sync()

.then((result) => {

app.listen(3000, () => {

console.log('Server started');

})

})

.catch((err) => {

console.log(err);

})

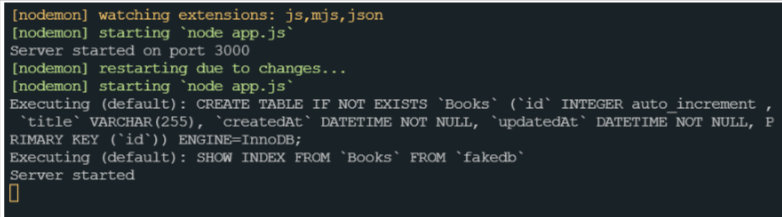

If your server is running you will be able to see the following query in your terminal.

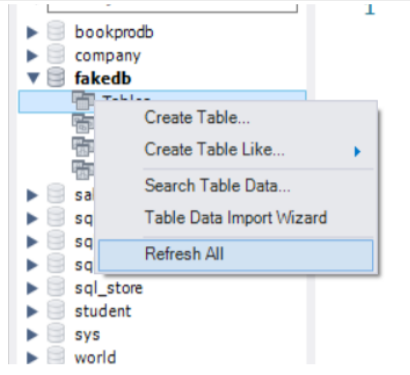

Go to the MySQL workbench and refresh by right-clicking on tables.

Now let’s create an API to add bulk data in the books table. In the app.js file add the following API snippet.

1 2 3 4 5 6 7 8 9 10app.post('/books', async (req, res) => { const data = req.body; console.log(data); try { const books = await db.Book.bulkCreate(data); res.send(books); } catch (err) { res.send(err.message); } })

You have to make a POST request to http://localhost:3000/books with the following data in the body to check whether your API is working or not. Remember, we are using bulkCreate because the body is an array of JSON objects. Following is an example of the body.

1 2 3 4 5 6[ { "title": "The final empire", "author": "Brandon Sanderson" } ]

Making the request will show you that the API is working.

Let’s now create functionality to process the CSV. First we need to create a file with sample CSV data. Create a CSV file, open it using notepad, and paste the following in the file.

Make sure to follow the below format.

title,author

3 body problem,Cixin Liu

Elantris,Brandon Sanderson

Our Mathematical Universe,Max Tegmark

Now, we need to configure our server to receive files. Install the file-upload package using npm.

npm i express-fileupload

Add the following snippet in the import section of your app.js.

1 2var fileUpload = require('express-fileupload'); app.use(fileUpload());

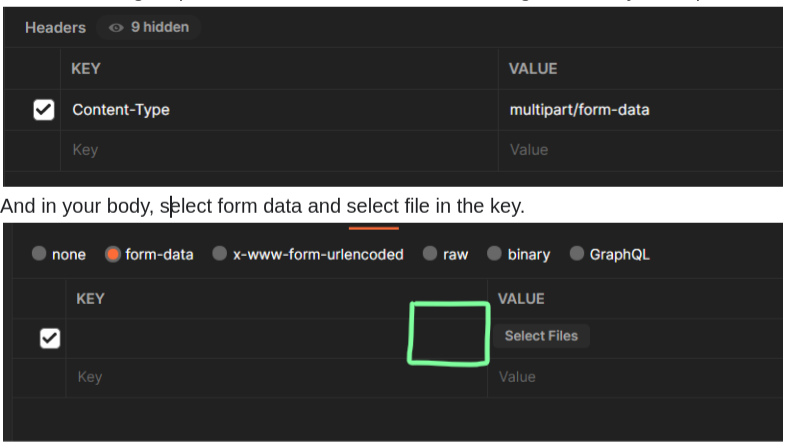

Now, let’s configure postman to send files. Add the following header to your request.

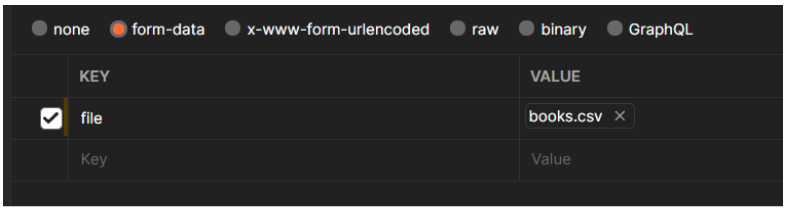

If you hover your cursor in the highlighted area after selecting form data then you will be able to select the file option. Add a key in the key column and select the CSV file. This is how I did it.

Paste the following API in the app.js file.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

app.post('/books/file', (req, res) => {

if (!req.files || !req.files.file) {

res.status(404)

.send('File not found');

} else if (req.files.file.mimetype === 'text/csv') {

res.send('file recieved');

} else {

res.status(409)

.send(

util.apiResponse(0, toast.INVALID_FILE_FORMAT, {

err: 'File format is not valid',

}),

);

}

})

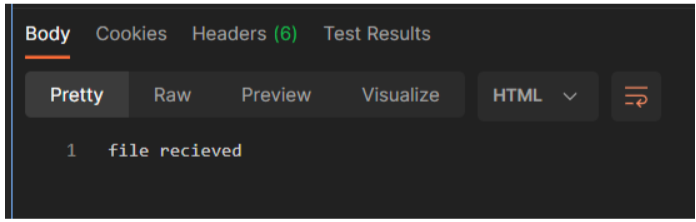

The files will be available in req.files key. In the first if condition we are checking whether the file is available or not in req.files and whether the req.files contain any key called file, which is the same key we sent through postman. We are also checking whether the file we are getting is a CSV or not. On running this and sending the request we get the following result in postman:

Since our file is getting received, let’s see what we are getting on the server. Modify your API like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

app.post('/books/file', (req, res) => {

if (!req.files || !req.files.file) {

res.status(404)

.send('File not found');

} else if (req.files.file.mimetype === 'text/csv') {

res.send(req.files.file);

} else {

res.status(409)

.send(

util.apiResponse(0, toast.INVALID_FILE_FORMAT, {

err: 'File format is not valid',

}),

);

}

})

This is where it will get weird, this is the response you will get on the server.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

{

"name": "books.csv",

"data": {

"type": "Buffer",

"data": [

116,

105,

116,

32,

.

.

.

.

.

.

84,

107

]

},

"size": 105,

"encoding": "7bit",

"tempFilePath": "",

"truncated": false,

"mimetype": "text/csv",

"md5": "e3117de8adf35abe94b9a5ece8309eef"

}

I’ve replaced a few lines in the response with dots because the response was very big, but as you can see it is getting converted in some gibberish numbers. Let’s try and handle this. We will convert this back to UTF-8, currently, it’s in HEX. Converting HEX to UTF-8 is simple in Javascript.

1

2

3

4

5

6

7

8

const convert = function (csvFile) {

const convert = (from, to) => (str) => Buffer.from(str, from)

.toString(to);

const hexToUtf8 = convert('hex', 'utf8');

let csvData = hexToUtf8(csvFile.data)

.split('\r\n');

console.log(csvData);

}

The above function is taking a csvFile in parameter (we will pass it req.files.file). Then we have created a function called hexToUtf8 using inbuilt Javascript features to convert HEX to UTF-8 and we are passing our data from our CSV file. Call this function from within your API like this by modifying the second if condition as such.

1 2 3 4 5else if (req.files.file.mimetype === 'text/csv') { let csvFile = req.files.file; convert(csvFile); res.send(req.files.file); }

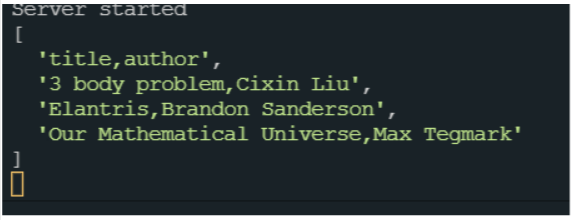

Hit the request again, the postman will still show the same output but in your console you will see this.

Nice, we have got the data. Now it’s just a matter of converting it into an array of key-value pairs. Our keys are the first row in the sent CSV file, which is why they are the first element here in our array.

Now modify the convert function like this.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

const convert = function (csvFile) {

const convert = (from, to) => (str) => Buffer.from(str, from)

.toString(to);

const hexToUtf8 = convert('hex', 'utf8');

let csvData = hexToUtf8(csvFile.data)

.split('\r\n');

console.log(csvData);

let csvRows = [];

csvData.forEach((data) => {

csvRows.push(data.split(','));

});

let data = [];

for (let i = 1; i < csvRows.length; ++i) {

let dict = {};

for (let j = 0; j < csvRows[i].length; ++j) {

dict[csvRows[0][j]] = csvRows[i][j];

}

data.push(dict);

}

return data;

}

And modify your API like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

app.post('/books/file', (req, res) => {

if (!req.files || !req.files.file) {

res.status(404)

.send('File not found');

} else if (req.files.file.mimetype === 'text/csv') {

let csvFile = req.files.file;

data = convert(csvFile);

res.send(data);

} else {

res.status(422)

.send(

util.apiResponse(0, toast.INVALID_FILE_FORMAT, {

err: 'File format is not valid',

}),

);

}

})

On doing everything right this is what you will see in postman on making the request.

1 2 3 4 5 6 7 8 9 10 11 12 13 14[ { "title": "3 body problem", "author": "Cixin Liu" }, { "title": "Elantris", "author": "Brandon Sanderson" }, { "title": "Our Mathematical Universe", "author": "Max Tegmark" } ]

You now have the data in the required format, all you need to do is save it in the database. Now I will just add this functionality in my API.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

app.post('/books/file', async (req, res) => {

if (!req.files || !req.files.file) {

res.status(404)

.send('File not found');

} else if (req.files.file.mimetype === 'text/csv') {

let csvFile = req.files.file;

data = convert(csvFile);

try {

const books = await db.Book.bulkCreate(data);

res.send(books);

} catch (err) {

res.send(err.message);

}

} else {

res.status(422)

.send(

util.apiResponse(0, toast.INVALID_FILE_FORMAT, {

err: 'File format is not valid',

}),

);

}

})

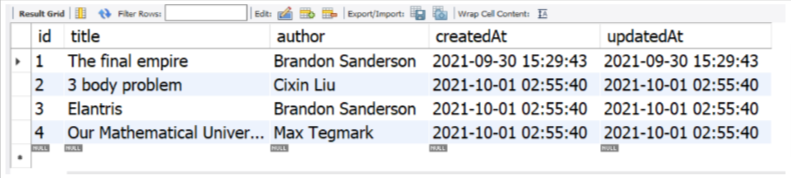

Now it will save the processed data. If I send the request and check the MYSQL workbench then I should have the data in my tables.

Behold, the data has been added from CSV without you ever needing to save the file on the server. If you’ve come this far then give yourself a pat on the back; this was a complicated one.

Good bye ✌✌