March 4, 2022

Text Summarization In NLP

Text summarization is one of the most useful tasks in Natural Language Processing (NLP). First, let us define it. Suppose we have a large amount of text, for example from articles, magazines, or social media, and too little time to read all of it. What we want is a brief digest. Summarization gives us one: it drops the unimportant parts and condenses the rest into a shorter text that keeps the original meaning.

Now let us see how we can implement text summarization in code. We will look at each approach in detail later; first, we classify the approaches.

Text Summarization

In text summarization we build algorithms or programs that reduce the size of a text and produce a summary of it. In machine learning this is called automatic text summarization.

Text summarization is the process of creating a shorter text that preserves the meaning of the original.

There are two approaches to text summarization.

Extractive approaches

Abstractive approaches

Extractive Approaches:

An extractive approach builds the summary from sentences that already exist in the text, using simple, traditional algorithms. With the frequency method, for example, we store each important word and its frequency in a dictionary, then add the sentences that contain the highest-frequency words to the final summary. Every word in the summary is therefore guaranteed to appear in the original text.

Abstractive Approaches:

An abstractive approach is more advanced. Depending on how short the summary needs to be, it replaces some sentences with shorter ones that carry the same meaning, so the summary can contain wording that never appears in the source text.

Here we generally use deep learning, that is, transformer-based models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT.

Extractive Approaches:

We will take a look at a few extractive methods below.

Text summarization using the frequency method

In this method we tokenize the text into words, count how often each word occurs, and store the words with their frequencies in a dictionary. We then split the text into sentences and score each sentence by the frequencies of the words it contains. The sentences with the highest scores are kept in the final summary.

    from nltk.corpus import stopwords
    from nltk.tokenize import sent_tokenize, word_tokenize

    # NLTK's sentence tokenizer models and stopwords corpus must be
    # downloaded once via nltk.download() before running this code.

    data = "my name is shubham kumar shukla. It is my pleasure to got opportunity to write article for xyz related to nlp"

    def solve(text):
        stopwords1 = set(stopwords.words("english"))

        # Count how often each word occurs, skipping stop words and punctuation.
        freqTable = {}
        for word in word_tokenize(text):
            word = word.lower()
            if word in stopwords1 or not word.isalnum():
                continue
            freqTable[word] = freqTable.get(word, 0) + 1

        # Score each sentence by summing the frequencies of the words it contains.
        # Matching against whole tokens avoids counting "art" inside "article".
        sentences = sent_tokenize(text)
        sentenceValue = {}
        for sentence in sentences:
            sentence_words = set(word_tokenize(sentence.lower()))
            for word, freq in freqTable.items():
                if word in sentence_words:
                    sentenceValue[sentence] = sentenceValue.get(sentence, 0) + freq

        # Nothing to score (empty text or only stop words).
        if not sentenceValue:
            return ""

        average = int(sum(sentenceValue.values()) / len(sentenceValue))

        # Keep the sentences that score more than 1.2 times the average.
        summary = ""
        for sentence in sentences:
            if sentenceValue.get(sentence, 0) > 1.2 * average:
                summary += " " + sentence
        return summary.strip()

Sumy:

Sumy is a Python library that implements several extractive summarizers, including the graph-based TextRank algorithm. Below is a TextRank implementation using Sumy.

    # Load packages
    from sumy.nlp.tokenizers import Tokenizer
    from sumy.parsers.plaintext import PlaintextParser
    from sumy.summarizers.text_rank import TextRankSummarizer

    # `text` is the string to summarize; here we reuse the sample
    # string `data` defined in the frequency example.
    text = data

    # Create a text parser using tokenization
    parser = PlaintextParser.from_string(text, Tokenizer("english"))

    # Summarize using Sumy's TextRank, keeping 2 sentences
    summarizer = TextRankSummarizer()
    summary = summarizer(parser.document, 2)

    # Join the selected sentences into a single string
    text_summary = " ".join(str(sentence) for sentence in summary)
    print(text_summary)

LexRank:

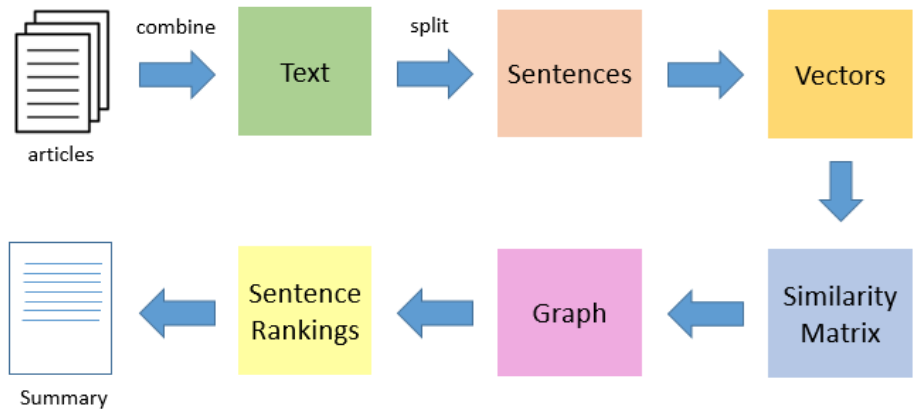

LexRank is an unsupervised, graph-based approach closely related to TextRank. Each sentence is represented as a vector, and the cosine similarity between every pair of sentences is computed. Sentences that are similar to many other sentences are considered central to the text, and the most central ones are chosen for the summary.

    from sumy.nlp.tokenizers import Tokenizer
    from sumy.parsers.plaintext import PlaintextParser
    from sumy.summarizers.lex_rank import LexRankSummarizer

    def sumy_method(text):
        parser = PlaintextParser.from_string(text, Tokenizer("english"))
        summarizer = LexRankSummarizer()
        # Summarize the document with 2 sentences
        summary = summarizer(parser.document, 2)
        # Join the selected sentences into a single string
        return " ".join(str(sentence) for sentence in summary)
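
To make the idea behind LexRank more concrete, here is a minimal sketch of graph-based sentence ranking that uses only the standard library. It is not Sumy's implementation: the function names (`tokenize`, `cosine`, `rank_sentences`, `summarize`) are hypothetical, it uses raw word counts instead of TF-IDF weights, and it applies no similarity threshold, so treat it as an illustration of the principle under those assumptions.

```python
import math
import re
from collections import Counter


def tokenize(sentence):
    """Lowercase a sentence and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", sentence.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_sentences(sentences, damping=0.85, iterations=50):
    """Score sentences with a PageRank-style iteration over the similarity graph."""
    n = len(sentences)
    if n == 0:
        return []
    vectors = [Counter(tokenize(s)) for s in sentences]
    # sim[i][j] is the edge weight between sentences i and j (no self-loops).
    sim = [[cosine(vectors[i], vectors[j]) if i != j else 0.0 for j in range(n)]
           for i in range(n)]
    out_weight = [sum(row) for row in sim]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        new_scores = []
        for i in range(n):
            incoming = sum(sim[j][i] / out_weight[j] * scores[j]
                           for j in range(n) if out_weight[j] > 0)
            new_scores.append((1 - damping) / n + damping * incoming)
        scores = new_scores
    return scores


def summarize(sentences, count=2):
    """Return the `count` highest-scoring sentences in their original order."""
    scores = rank_sentences(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:count]
    return [sentences[i] for i in sorted(top)]
```

A sentence that shares no words with the rest of the text receives only the small baseline score, while sentences that resemble many others reinforce each other and rise to the top.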

Using Luhn:

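This approach is based on the frequency method. Words that occur often in the text (ignoring stop words) are treated as significant, and each sentence is scored by how densely significant words cluster within it. It relies on term frequency alone rather than a full TF-IDF (term frequency-inverse document frequency) weighting.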

    from sumy.nlp.tokenizers import Tokenizer
    from sumy.parsers.plaintext import PlaintextParser
    from sumy.summarizers.luhn import LuhnSummarizer

    def lunh_method(text):
        parser = PlaintextParser.from_string(text, Tokenizer("english"))
        summarizer_luhn = LuhnSummarizer()
        # Summarize the document with 2 sentences
        summary_1 = summarizer_luhn(parser.document, 2)
        # Join the selected sentences into a single string
        return " ".join(str(sentence) for sentence in summary_1)
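
As a rough illustration of how Luhn-style scoring works, the sketch below marks frequent non-stop words as significant and scores a sentence by its densest cluster of them (the square of the number of significant words in the cluster, divided by the cluster's length). Everything here is an assumption made for the example and not Sumy's code: the helper names, the tiny `STOP_WORDS` set, the `min_count` threshold, and the `max_gap` of 4 words.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real system would use a fuller one.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "on", "and", "it", "for", "by"}


def tokenize(text):
    """Lowercase text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def significant_words(sentences, min_count=2):
    """Words (excluding stop words) that occur at least `min_count` times."""
    counts = Counter(w for s in sentences for w in tokenize(s) if w not in STOP_WORDS)
    return {word for word, count in counts.items() if count >= min_count}


def luhn_score(sentence, significant, max_gap=4):
    """Score a sentence by its best cluster of significant words.

    A cluster is a run of significant words separated by at most `max_gap`
    other words; its score is (significant words)^2 / (cluster length).
    """
    words = tokenize(sentence)
    positions = [i for i, w in enumerate(words) if w in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 <= max_gap:
            count += 1  # still inside the current cluster
        else:
            best = max(best, count * count / (prev - start + 1))
            start, count = pos, 1  # begin a new cluster
        prev = pos
    return max(best, count * count / (prev - start + 1))
```

A summary would then keep the sentences with the highest `luhn_score`, in their original order.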

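LSA:

Latent Semantic Analysis (LSA) decomposes the term-by-sentence matrix of the text into a low-dimensional space using singular value decomposition (SVD). Because related words end up in the same latent topics, LSA can preserve the meaning of the text while summarizing.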

    from sumy.nlp.tokenizers import Tokenizer
    from sumy.parsers.plaintext import PlaintextParser
    from sumy.summarizers.lsa import LsaSummarizer

    def lsa_method(text):
        parser = PlaintextParser.from_string(text, Tokenizer("english"))
        summarizer_lsa = LsaSummarizer()
        # Summarize the document with 2 sentences
        summary_2 = summarizer_lsa(parser.document, 2)
        # Join the selected sentences into a single string
        return " ".join(str(sentence) for sentence in summary_2)
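
To show the decomposition idea on a small scale, the following sketch builds a term-by-sentence count matrix and ranks sentences by their loading on the single strongest latent topic. It is a deliberate simplification and not what Sumy does internally: it keeps only one topic, and it approximates the first right singular vector with power iteration instead of computing a full SVD. The function names are hypothetical, and the code assumes the input contains at least one word.

```python
import math
import re


def tokenize(sentence):
    """Lowercase a sentence and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", sentence.lower())


def term_sentence_matrix(sentences):
    """Rows are vocabulary terms, columns are sentences, cells are word counts."""
    vocab = sorted({w for s in sentences for w in tokenize(s)})
    index = {word: row for row, word in enumerate(vocab)}
    matrix = [[0.0] * len(sentences) for _ in vocab]
    for col, sentence in enumerate(sentences):
        for word in tokenize(sentence):
            matrix[index[word]][col] += 1.0
    return matrix


def dominant_topic_loadings(matrix, iterations=100):
    """Approximate the first right singular vector of `matrix`.

    Power iteration on A^T A converges to its dominant eigenvector, which
    is the first right singular vector of A (one value per sentence).
    """
    n = len(matrix[0])
    gram = [[sum(row[i] * row[j] for row in matrix) for j in range(n)]
            for i in range(n)]
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        w = [sum(gram[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return v


def lsa_rank(sentences):
    """Sentence indices ordered from most to least aligned with the main topic."""
    loadings = dominant_topic_loadings(term_sentence_matrix(sentences))
    return sorted(range(len(sentences)), key=lambda i: abs(loadings[i]), reverse=True)
```

Taking the first few indices returned by `lsa_rank` gives the sentences that best represent the dominant topic of the text; a fuller LSA summarizer would combine several topics weighted by their singular values.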